Multimodal AI trained on YouTube-TikTok-Netflix (object-segmented and identified audio-video-speech) and public-domain science data (that exceeds the spectrum of the human sensorial field) will be grounded in a world that is in some ways vaster than that experienced by a single human neurophysiology.

Disclaimer

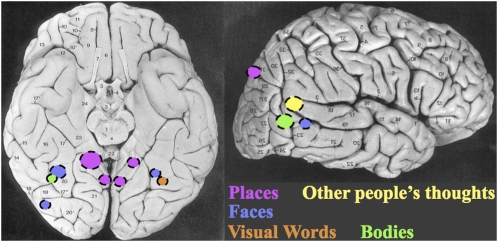

First, this is speculative hyperbole, conjectural extrapolation, that seriously and provocatively examines the implications of accepting the universe as a body. From this perspective, training an AI to be embodied involves exposing it to data emerging at all levels of scale. Second, this opinionated, playful, conceptuality-exfoliating exploration examines the potential for online video archives to bootstrap AI world-building. Algorithmic procedures for extracting information from video now correspond to some aspects of evolutionary neurological capacity (object-face-emotion recognition).[^1]

[^1]: Kanwisher, Nancy. 2010. “Functional Specificity in the Human Brain: A Window into the Functional Architecture of the Mind.” Proceedings of the National Academy of Sciences of the United States of America 107 (25): 11163–70. https://doi.org/10.1073/pnas.1005062107. “Many neuroscientists today challenge the strong (exclusive) version of functional specialization. … a recent authoritative review of the brain-imaging literature on language concludes that “areas of the brain that have been associated with language processing appear to be recruited across other cognitive domains” (2). The case of language is not unique…. In this review, I address these ongoing controversies about the degree and nature of functional specialization in the human brain, arguing that recent neuroimaging studies have demonstrated that at least a few brain regions are remarkably specialized for single high-level cognitive functions…. and I consider the computational advantages that may be afforded by specialized regions in the first place. I conclude by speculating that the cognitive functions implemented in specialized brain regions are strong candidates for fundamental components of the human mind.”

Introduction: An Expanded Definition of Embodied Data

The embodiment problem in artificial intelligence (AI) asks: can a machine ever have an embodied sense of the world similar to human neurophysiological and phenomenological experience? Generations of credible, extremely intelligent and astute theorists (Searle,[^2] Merleau-Ponty,[^3] Dreyfus,[^4] Clark,[^5] and Hayles,[^6] among others) claim that a purely computational-mechanist-reductionist model of intelligence lacks the grounding in sensory and physical experience that is integral to human cognition and consciousness. While many believe the embodiment problem to be insurmountable, others, such as Margaret A. Boden,[^7] have suggested there are pathways.

[^2]: Searle, John R. 1980. “Minds, Brains, and Programs.” Behavioral and Brain Sciences 3 (3): 417–24. https://doi.org/10.1017/S0140525X00005756.

[^3]: Merleau-Ponty, Maurice. 2013 (First published 1945). Phenomenology of Perception. Routledge.

[^4]: Dreyfus, Hubert L. 1992. What Computers Still Can’t Do: A Critique of Artificial Reason. MIT Press.

[^5]: Clark, Andy. 1998. Being There: Putting Brain, Body, and World Together Again. MIT Press.

[^6]: Hayles, N. Katherine. 1999. How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics. Chicago, IL: University of Chicago Press. https://press.uchicago.edu/ucp/books/book/chicago/H/bo3769963.html

[^7]: Boden, Margaret. 2006. Mind As Machine: A History of Cognitive Science. Oxford, New York: Oxford University Press.

Recently, a proliferation of multimodal data, coinciding with extremely rapid increases in machine-learning speech-transcription, image-generation, and object-segmentation capacities, suggests that future synthetic intelligent embodiment will be nourished in unprecedented ways that extend embodiment into molecular, genetic and astrophysical dimensions inaccessible to human cognition. If ML architectures modularize and become reflectively absorbent, then there is no shortage of data capable of evoking the apparition of an extended algorithmic embodiment.

Context: The Grounding Problem

The grounding problem[^8] refers to the challenge of connecting symbols and semantics to the real world in a meaningful way. An AI model that can possess a sensorial identity that changes over time, allowing a calculus of modulations in the phenomenological field, is often considered a first principle for achieving embodiment. This requires an integration of various data-sensorial modalities: language, images, audio, and potentially even more intricate biological and physiological data.

[^8]: Harnad, Stevan. 1990. “The Symbol Grounding Problem.” Physica D: Nonlinear Phenomena 42 (1): 335–46. https://doi.org/10.1016/0167-2789(90)90087-6.

Connectionism, the architectural paradigm underlying contemporary neural network large language models (LLMs), does to some extent answer this dilemma through predictive probabilistic sequences of text-tokens. Yet, prescient poet-artist-theorist-critic John Cayley has accurately identified the LLM process as textual, not languaging in the subtle embodied nuanced sense of the word: "In sum, what they [LLMs] do not have is data pertaining to the human embodiment of language. Language as such is inseparable from human embodiment at any and all levels of linguistic structure. The LLMs are working with text not language."[^9]

[^9]: Cayley, John. 2023. “Modelit: Eliterature à La (Language) Mode(l).” Electronic Book Review, July. https://doi.org/10.7273/2bdk-ng31.

While acknowledging the strength, persuasiveness, depth and clarity of Cayley's arguments, the central claim here is that multimodal ML trained on YouTube and on massive quantities of public-domain science data that exceed the spectrum of the human-perceivable world will give AI a grounding that is in some ways vaster than that experienced by a single human neurophysiology.

The distributed body of 21st century AI, ingesting the output of mass-uploaded images-text-speech-video and cartographic-accelerometer data, will utilize humanity as its skin: the mobile camera-phones in our pockets, the Alexas and Google Nest devices in our dwellings, the wifi in our appliances, the biometric data from Fitbits, the smart car feeds, the surveillance grids, the meteorological sensors, the medical datasets, etc.

Permeable membranes, perforations, phenomena siphons. A new form of instrumentalized-interiority, a language specific to AI may be born.

Multimodal Data: Google's Gemini will eat YouTube

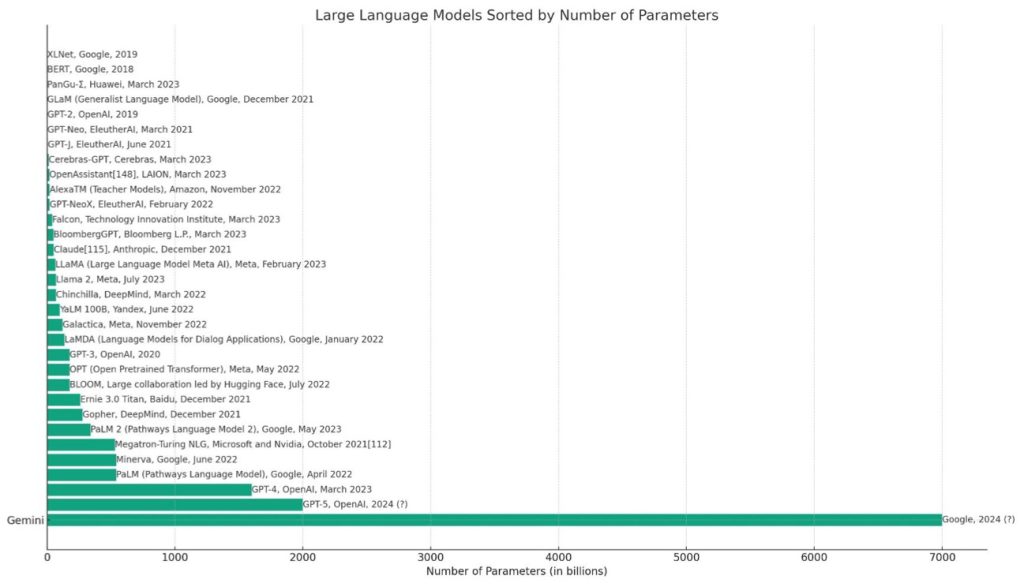

Models like Google's upcoming Gemini[^10] (which is reputedly being trained on YouTube[^11] and rumored to be released this fall/winter) showcase the potential for a multimodal reinforcement-learning approach in AI systems. In terms of raw scale,[^12] YouTube is definitively an audio-visual-speech archive capable of offering a grounded sensorial field that changes over time. As contemporary multimodal ML ingests video training data, it likely utilizes a variety of pre-digestion techniques: image segmentation, object recognition, geolocation, facial-emotional recognition, and speech detection-transcription. So the video data is not simply pixels tracked over screenspace; it is an AI-labelled physical world. This vast labeled physical supervised-data-world of things-on-trajectories-thru-space is synchronized to emotions as expressed gesturally-facially-textually. Each video voiceover offers insight into appropriate mental states and linguistic reactions. Essentially it is the collective audio-visual-vocal representations of a generation of planetary uploads. Does this not constitute a childhood? Only if the neural network architecture is capable of correctly attuning, aligning and absorbing.

[^10]: Victor, Jon. 2023. “How Google Is Planning to Beat OpenAI.” The Information, September 15, 2023. https://www.theinformation.com/articles/the-forced-marriage-at-the-heart-of-googles-ai-race.

[^11]: Thompson, Alan D. 2023. “Google DeepMind Gemini.” Life Architect (blog). May 20 [Updated Sept], 2023. https://lifearchitect.ai/gemini/. Note: Hyperbolic predictions of dataset size: 60-100T, and parameter count: 7-10T.

[^12]: Patel, Dylan. 2023. “Google Gemini Eats The World – Gemini Smashes GPT-4 By 5X, The GPU-Poors.” August 28th, 2023. https://www.semianalysis.com/p/google-gemini-eats-the-world-gemini. “Google has woken up, and they are iterating on a pace that will smash GPT-4 total pre-training FLOPS by 5x before the end of the year. The path is clear to 20x by the end of next year given their current infrastructure buildout.”
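To make the idea of an "AI-labelled physical world" concrete, here is a minimal sketch of what pre-digested video might look like as data. It is purely illustrative: the class names, fields, and the `trajectory` helper are hypothetical, and nothing here reflects how Gemini or any real pipeline stores its annotations. It only shows segmented objects, an emotion label, and a transcript aligned on one shared timeline.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical bounding box: x, y, width, height as fractions of the frame.
Box = Tuple[float, float, float, float]


@dataclass
class LabelledObject:
    label: str  # e.g. the output of an object-recognition model
    box: Box    # e.g. the output of a segmentation model


@dataclass
class AnnotatedFrame:
    time_s: float                                  # timestamp in seconds
    objects: List[LabelledObject] = field(default_factory=list)
    emotion: Optional[str] = None                  # facial-emotional label, if any
    transcript: str = ""                           # speech transcribed at this moment


def trajectory(frames: List[AnnotatedFrame], label: str):
    """Return (time, box centre) pairs for one labelled thing moving through space."""
    points = []
    for frame in sorted(frames, key=lambda f: f.time_s):
        for obj in frame.objects:
            if obj.label == label:
                x, y, w, h = obj.box
                points.append((frame.time_s, (x + w / 2, y + h / 2)))
    return points


# Two invented frames: a dog drifting rightward while a voiceover reacts.
clip = [
    AnnotatedFrame(0.0, [LabelledObject("dog", (0.0, 0.0, 0.5, 0.5))], "joy", "look at him go"),
    AnnotatedFrame(1.0, [LabelledObject("dog", (0.25, 0.0, 0.5, 0.5))], "joy", "he loves the beach"),
]
dog_path = trajectory(clip, "dog")
print(dog_path)
```

The point of the sketch is the synchronization: each trajectory point shares a timestamp with an emotional label and a linguistic reaction, which is the sense in which the archive is more than pixels.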

Brain regions & Epigenetics: Evolution's embodied preset learning advantages

Consider the brain regions that are essential for the development of an infant's ability to recognize faces-emotions, acquire language, and navigate space. These include but are not limited to: facial recognition and perception (Fusiform Gyrus & Superior Temporal Sulcus), language comprehension and production (Broca's and Wernicke's Areas), spatial navigation, conceptual maps and memory (Entorhinal Cortex & Hippocampus), object recognition (Inferior Temporal Cortex), higher cognitive functions and language (Prefrontal Cortex). These come pre-loaded and optimized for the sapien phenomenological context. Evolution injects these capacities inside every sapien (and mammal) baby as a preset advantage, an embodied survival strategy.

Emulating the physiology (brain-gut-axis muscle-dendrite microbiome-enteric vagal-autonomic networks intertwined with physicalized-emotive-cognition) will be difficult, and impossible in the near term with current AI architectures. How will it evolve? There are indications that the DeepMind strategy of reinforcement learning policy networks playing inside simulations will be incorporated into future iterations of foundational multi-modal language models.[^13]

[^13]: See “OpenAI Mimics Google DeepMind’s AGI Strategy”: “OpenAI is planning to up its game literally as it ventures into simulated world to achieve its ultimate goal of attaining AGI.” OpenAI recently purchased the creators of Biomes (GitHub: ill-inc/biomes-game, “Biomes is an open source sandbox MMORPG built for the web using web technologies such as Next.js, Typescript, React and WebAssembly”), which merges AI and agents into a playworld, as in AI Town (GitHub: a16z-infra/ai-town, “A MIT-licensed, deployable starter kit for building and customizing your own version of AI town - a virtual town where AI characters live, chat and socialize”): “AI Town is a virtual town where AI characters live, chat and socialize. This project is a deployable starter kit for easily building and customizing your own version of AI town. Inspired by the research paper Generative Agents: Interactive Simulacra of Human Behavior.” OpenAI also invested in 1X Technologies (“Androids Built to Benefit Society”): “1X is an engineering and humanoid robotics company producing androids capable of human-like movements and behaviors. The company was founded in 2014 and is headquartered in Norway, with over 60 employees globally. 1X's mission is to design androids that work alongside people, to meet the world’s labor demand and build an abundant society.”

So yes, similar innate-embodied capacities will be essential for any proficient embodied AI. Yet, in terms of the new-born evolutionary cognitive-capacities listed above, many are already emulated by AI at near-human, human, or more-than-human levels. AI already recognizes faces-plants-things (Facebook's DeepFace in 2014,[^14] Google's FaceNet in 2015[^15]), produces comprehensible, competent text (GPT-4), and segments images for object recognition: in April 2023 Meta's Segment Anything[^16] and in July 2023 Meta & Oxford's CoTracker[^17] were both released as publicly available repositories. Additionally, AI is capable of data analysis (analogous to cognition), and occasionally, according to some, including David Chalmers,[^18] appears to exhibit a limited theory of mind.[^19] [^20]

[^14]: Taigman, Yaniv, Ming Yang, Marc’Aurelio Ranzato, and Lior Wolf. 2014. “DeepFace: Closing the Gap to Human-Level Performance in Face Verification.” In 2014 IEEE Conference on Computer Vision and Pattern Recognition, 1701–8. https://doi.org/10.1109/CVPR.2014.220.

[^15]: Schroff, Florian, Dmitry Kalenichenko, and James Philbin. 2015. “FaceNet: A Unified Embedding for Face Recognition and Clustering.” In 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 815–23. https://doi.org/10.1109/CVPR.2015.7298682.

[^16]: “Segment Anything.” 2023. GitHub. Meta Research. https://github.com/facebookresearch/segment-anything.

[^17]: Karaev, Nikita, Ignacio Rocco, Benjamin Graham, Natalia Neverova, Andrea Vedaldi, and Christian Rupprecht. 2023. “CoTracker: It Is Better to Track Together.” arXiv. https://doi.org/10.48550/arXiv.2307.07635. See also “CoTracker: It is Better to Track Together,” Meta AI & Oxford Visual Geometry Group: “CoTracker, an architecture that jointly tracks multiple points throughout an entire video. This architecture is based on several ideas from the optical flow and tracking literature, and combines them in a new, flexible and powerful design. It is based on a transformer network that models the correlation of different points in time via specialised attention layers. ~ The transformer is designed to update iteratively an estimate of several trajectories. It can be applied in a sliding-window manner to very long videos, for which we engineer an unrolled training loop. It compares favourably against state-of-the-art point tracking methods, both in terms of efficiency and accuracy.”

[^18]: Ben Tossell on X: "@DeepMind @du_yilun @james_y_zou @davidchalmers42 talk about AI Challenges, LLM+ Consciousness. From a listener of the speech “10% probability that LLMs boast some level of consciousness”"

[^19]: Stanford computational psychologist Michal Kosinski, “Theory of Mind May Have Spontaneously Emerged in Large Language Models” (arXiv 2302.02083): “findings suggest that ToM-like ability (thus far considered to be uniquely human) may have spontaneously emerged as a byproduct of language models' improving language skills.” See also “Update on ARC's recent eval efforts,” in which ARC Evals claims: “As AI systems improve, it is becoming increasingly difficult to rule out that models might be able to autonomously gain resources and evade human oversight”. All this via AiExplained: “Theory of Mind Breakthrough: AI Consciousness & Disagreements at OpenAI [GPT 4 Tested],” YouTube.

[^20]: Kosinski, Michal. 2023. “Theory of Mind Might Have Spontaneously Emerged in Large Language Models.” arXiv. https://doi.org/10.48550/arXiv.2302.02083.

Evolutionary-preset neurological-analogs of innate-mammalian cognitive capabilities (generative neuro-grammars to bootstrap learning) have already arrived for AI in the form of specialized neural networks capable of emulating some aspects of the normal sensorial field.

Yet what of information beyond the awareness of normative human cognition?

A Data Diet for Embodiment Exponential: Public Domain Open Science repositories

Public domain science data provides another multimodal instrumentalized data-layer (tectonic, genetic, thermal, chemical, astronomical, quantum). This portion of AI's data diet can be considered as embodied interiority-exteriority (inside the human body, and far beyond it). It is an amalgamation of anonymized medical records, biometric data (endoscopic, cardiac, blood, virus or protein), ecosystem data,[^21] and instrumental observations (microscope, telescope, mass spectrometer, particle accelerator). Many rapidly expanding, intricate public-domain datasets offer an opportunity for a form of "embodied" learning that encompasses and far exceeds innate sapien consciousness.

[^21]: Høye, Toke T., Johanna Ärje, Kim Bjerge, Oskar L. P. Hansen, Alexandros Iosifidis, Florian Leese, Hjalte M. R. Mann, Kristian Meissner, Claus Melvad, and Jenni Raitoharju. 2021. “Deep Learning and Computer Vision Will Transform Entomology.” Proceedings of the National Academy of Sciences 118 (2): e2002545117. https://doi.org/10.1073/pnas.2002545117.

Instrumentalized data extends the body into metabolisms, microbiomes, meta-genomics, ecosystems and infrared astrophysics. Essentially, this is embodiment exponential, a self-referential repository of biological and meta-biological parameters.

Consider: Dryad (hosts more than 30,000 datasets), Zenodo (over 500,000 records), OpenNeuro (899 datasets), AlphaFold (over 200 million protein structures). The James Webb Space Telescope (JWST) is a sun-orbiting infrared observatory 1.5 million kilometers from Earth, currently generating unprecedented data on the origins of the universe. JWST data is public and released at MAST, the Barbara A. Mikulski Archive for Space Telescopes, which contains "millions of observations from JWST, Hubble, Kepler, GALEX, IUE, FUSE, and more."

Genetic[^22] public domain data: the International Nucleotide Sequence Database Collaboration (INSDC) comprises three main databases: GenBank in the United States, the European Nucleotide Archive (ENA) in Europe, and the DNA Data Bank of Japan (DDBJ). The size of the INSDC is not fixed and grows continually as new sequences are submitted. As of Aug 2023, GenBank & WGS contain (by my rough estimate) approximately 22 trillion bases (20.27 terabytes of data). INSDC is part of the Global Core Biodata Resources named by the Global Biodata Coalition (GBC): "The GBC defines biodata resources as any biological, life science, or biomedical database that archives research data generated by scientists, or functions as a knowledgebase by adding value to scientific data by aggregation, processing, and expert curation. Global Core Biodata Resources are biodata resources that are of fundamental importance to the wider biological and life sciences community and the long term preservation of biological data. They provide free and open access to their data, are used extensively both in terms of the number and distribution of their users, are mature and comprehensive, are considered authoritative in their field, are of high scientific quality, and provide a professional standard of service delivery." Some 26,000 microbiome datasets are publicly available through the Integrated Microbial Genomes & Microbiomes (IMG/M) database.[^23]

[^22]: “Estimates predict that genomics research will generate between 2 and 40 exabytes of data within the next decade.” “Genomic Data Science Fact Sheet.” n.d. Genome.Gov. Accessed September 27, 2023. https://www.genome.gov/about-genomics/fact-sheets/Genomic-Data-Science. ~ “Between 2018 (~100 Petabytes) and 2021 (280 PB), data created on instruments sold by Illumina, the leading gene sequencing machine company, exhibited a Compound Annual Growth Rate (CAGR) of 41%, a doubling every 2 years. This trend is only accelerating. The volume of data generated from genomics projects is immense, and is estimated to require up to 40 Exabytes of annual storage needs by 2025, an order of magnitude larger than annual storage needs estimated at YouTube in 2025 and a volume of data that is 8x larger than would be needed to store every word spoken in history. … Within the field of genomics, over 90% of the generated data is produced by Illumina sequencers. … ” Patel, Dylan. n.d. “Nvidia Illumina Acquisition: The AI Foundry for Healthcare – The Hardware of Life.” Published and accessed September 27, 2023. https://www.semianalysis.com/p/nvidia-buys-illumina-the-ai-foundry?post_id=137441303&r=2vtv9.

[^23]: Joy, Allison, and Lawrence Berkeley National Laboratory. n.d. “Novel Computational Approach Confirms Microbial Diversity Is Wilder than Ever.” Accessed October 11, 2023. https://phys.org/news/2023-10-approach-microbial-diversity-wilder.html.
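The growth and size figures quoted for genomic data can be sanity-checked with a few lines of arithmetic. The sketch below is an illustration, not the method behind the rough estimate above: the function names are hypothetical, and the storage conventions (one byte per base, or a 2-bit packed encoding, with a binary terabyte of 2^40 bytes) are assumptions chosen only to show the order of magnitude.

```python
import math


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between a start and an end value."""
    return (end / start) ** (1 / years) - 1


def doubling_time(rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


# Illumina figures quoted in the footnote: ~100 PB (2018) to 280 PB (2021).
growth = cagr(100, 280, 3)
years_to_double = doubling_time(growth)

# GenBank & WGS estimate: ~22 trillion bases.
bases = 22e12
TIB = 2 ** 40                       # assumption: binary terabytes
tib_one_byte_per_base = bases / TIB            # assumption: 1 byte per base
tib_two_bits_per_base = bases * 2 / 8 / TIB    # assumption: 2-bit packed A/C/G/T

print(f"CAGR: {growth:.1%}, doubling every {years_to_double:.2f} years")
print(f"1 byte/base: {tib_one_byte_per_base:.1f} TiB, "
      f"2 bits/base: {tib_two_bits_per_base:.1f} TiB")
```

Under these assumptions the quoted 41% growth rate and two-year doubling are consistent with each other, and one byte per base lands near the 20-terabyte estimate; a packed encoding would need roughly a quarter of that.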

The Gravitational-Wave Open Science Center (GWOSC) releases public domain data from LIGO, the Laser Interferometer Gravitational-Wave Observatory. Particle accelerator data: "In September 2022, CERN approved a new policy for open science at the Organization, with immediate effect. The policy aims to make all CERN research fully accessible, inclusive, democratic and transparent, for both other researchers and wider society."

Cellphones + Science: A Distributed Skin for an Embodied AI

With approximately 30,000 hours of video uploaded to YouTube every hour, the scale of available bodies, spaces, places, things, voices, styles, music, modes, tutorials, talks, dances, mashups, reviews, etc. forms a recursive avalanche of data-points that permeate any future AI with surplus material to train at AGI scale. If this oceanic phenomena surplus is augmented by illicit flows of state-surveillance ISP-GPS-mapped phone/video/chat calls (the Snowden revelations proved that GCHQ, Five Eyes, and the NSA are eager to illicitly tap into continental fiber optics, so we can assume every large government is similarly extracting incomprehensibly large amounts of private data),[^24] or if a social network such as Meta or ByteDance or Microsoft harvests its users' EULA-inscribed data, the state space of potential psychological topologies suggests that an emergent, self-reflexive, embodiment-aware AI might arise.

[^24]: Perlroth, Nicole. 2021. This Is How They Tell Me the World Ends: The Cyberweapons Arms Race. 1st edition. New York London Oxford New Delhi Sydney: Bloomsbury Publishing.

Sometimes looking out is accomplished by looking in, and the inverse: beyond the human skin, in the permeable membranes of diverse ecosystems; within the body, in the microbiome and enzymatic endocrine and neurological fluctuations; at the center of every eukaryotic biological cell, in genetic RNA, DNA and transposon data; outside the earth's atmosphere, in the vast impeccable infrared microwave radiant universe.

The growing repository of accessible information from a myriad of sources (cellphones, telescopes, medical devices, surveillance cameras, fitness trackers, tagged-chipped animals, meteorological sensors, satellites, etc.) creates a potential for a distributed form of embodiment, a planetary-scale AI. This aligns with the idea that all life and data can be considered to emanate from a singular field of energy; an AGI may thereby unify disparate data sources into a cohesively embodied experience.

Conclusion: Toward a Nuanced Understanding of Embodied AI

While complete embodiment in the human sense remains elusive, AI's evolution toward an 'embodied state' is becoming increasingly plausible due to advancements in data diversity and machine learning algorithms that emulate aspects of evolutionary neurological capacity. A fully realized embodied AI may well have a different form of 'consciousness',[^25] distinct yet integrative, offering an alternate dimensionality to notions of intelligence and earth-ecosystem bio-film interconnectedness. Such an extended-embodiment AI, enhanced by a diet of instrumentalized data, may read-write-think in inconceivable[^26] ways.

[^25]: Butlin, Patrick, Robert Long, Eric Elmoznino, Yoshua Bengio, Jonathan Birch, Axel Constant, George Deane, et al. 2023. “Consciousness in Artificial Intelligence: Insights from the Science of Consciousness.” arXiv. https://doi.org/10.48550/arXiv.2308.08708.

[^26]: After writing this embodiment essay, one day after launching it on substack, by chance, via a GPT-4 hallucinated link attributed to the following first author, I stumbled into the analytical-philosophical conceptual-engineering ‘world’ of Herman Cappelen, and Josh Dever. 2023. “AI WITH ALIEN CONTENT AND ALIEN METASEMANTICS.” Forthcoming in L. Anderson and E. Lepore (Eds.), Oxford Handbook of Applied Philosophy of Language. Oxford University Press. https://hermancappelen.net/docs/AIAlienMetasemantics.pdf. This also segues, somewhat vaguely nodding, toward: Bogost, Ian. 2012. Alien Phenomenology, or What It’s Like to Be a Thing. 1st edition. Minneapolis (Minn.): Univ Of Minnesota Press.

Postscripts (tl;nntr - no need to read)

Defining Embodiment : An Incomplete Overview

As with other contentious terms like consciousness or life,[^27] many definitions of embodiment exist; none are conclusive.[^28] Often embodiment seems to be a euphemism for an enigmatic comprehensive self-referentiality. A being capable of thinking/stating: I am a being in a world and I know it. A phenomenological creature, as for Merleau-Ponty.[^29] An organism capable of interpreting/navigating/creating apparent continuities in spacetime, a worlding creature, as for Heidegger.[^30] Maybe more specifically, embodiment is the coalesced distributed experiencing and cognizing faculties that permit the nebulous processes known as awareness, interpretation, and meaning to emerge in sentient Vibrant Matter, as for Jane Bennett.[^31]

[^27]: “The 5 core principles of life,” Nobel Prize-winner Paul Nurse.

[^28]: Let me be clear: embodiment, as it is used here, is not simply a robotic humanoid face attached to a chatbot.

[^29]: Merleau-Ponty, Maurice. 1945. Phenomenology of Perception.

[^30]: Heidegger, Martin. 1927. Being and Time.

[^31]: Bennett, Jane. 2010. Vibrant Matter: A Political Ecology of Things. A John Hope Franklin Center Book. Durham, NC: Duke University Press.

Conversely, in Minds, Brains and Programs, John Searle stated, "Any mechanism capable of producing intentionality must have causal powers equal to those of the brain."[^32] So, implicitly, Searle equates the brain with the conceptual causative power to encapsulate the organism as a body, and thereby generate intentionality specific to its locality. Simplified, Searle's notion (shared by many) of embodiment is the brain within a skull, controlling a body that is perceived as less than the analytically potent neurology in the head. This is a constrained logical approach.

[^32]: Searle, John R. 1980. “Minds, Brains, and Programs.” Behavioral and Brain Sciences 3 (3): 417–24. https://doi.org/10.1017/S0140525X00005756.

Another definition of embodiment, aligned tangentially with the underlying intuition of this essay, is enaction. Eleanor Rosch, in the introduction to the revised 2017 edition of the influential Embodied Mind (first published in 1991) writes: "Enaction brings a distinctive perspective into the embodiment conversation. Whereas most embodiment research focuses on the interaction between body and mind, body and environment, or environment and mind, enaction sees the lived body as a single system that encompasses all three."[^33] Within the extended-enactive framing, embodiment is visceral, inter- and para-responsivity, a smear of microbiome communities communicating with the autonomic nervous system (the enteric nervous system contains 0.5% of the neurons in each human body, and secretes 95% of the body's serotonin). Bursting into a plurality of processes that extend beyond the skin into the ecosystem and universe: from Lynn Margulis' endosymbiotic mitochondrial ion gates to James Lovelock's Gaia, or Buckminster Fuller's spaceship-earth, a syntropic energy locus.

[^33]: Varela, Francisco J., Evan Thompson, Eleanor Rosch, and Jon Kabat-Zinn. 2017. The Embodied Mind, Revised Edition: Cognitive Science and Human Experience. 2nd Revised ed. edition. Cambridge, Massachusetts ; London England: The MIT Press.

Extended enactive embodiment is also congruent with Donna Haraway's infamous manifesto notion of cyborg embodiment (machine-human-gender-confluences). In "Staying with the Trouble: Making Kin in the Chthulucene", Haraway extends her notion of embodiment to encompass symbiosis between human and non-human continuums (Previous sentence was written by GPT-4). Her ideas continue to resonate and influence: "In How the Body Shapes the Way We Think, Rolf Pfeifer and Josh Bongard demonstrate that thought is not independent of the body but is tightly constrained, and at the same time enabled, by it. They argue that the kinds of thoughts we are capable of have their foundation in our embodiment—in our morphology and the material properties of our bodies."[^34] And as Haraway wonderfully, casually, insightfully, and presciently riffs in her opening comments to a 2003 UC Berkeley lecture entitled From Cyborgs to Companion Species, "Since everyone of us is a congerie of many species running into the millions of entities, which are indeed conditions of our very being, so the mono-specificity is one of the many illusions and wounds to our narcissism …"[^35]

[^34]: Pfeifer, Rolf, and Josh Bongard. 2006. How the Body Shapes the Way We Think. MIT Press. https://mitpress.mit.edu/9780262537421/how-the-body-shapes-the-way-we-think/.

[^35]: Donna Haraway: "From Cyborgs to Companion Species".


Upcoming Trilogy: Embodiment, Individuation, Transcendence.

This essay is the first segment of a trilogy: Embodiment, Individuation, Transcendence. Individuation will consider AI and the emergence of anthropological notions of self identity, deal with a transition from GPT-4's MOE (Mixture Of Experts)[^36] to a speculative MOM (Mixture Of Modules) architecture, and suggest a few emergent recursive pathways along which future AI may self-design its own architectures. The third essay will poetically leverage transcendentalism (from the Ashtavakra Gita to Dickinson, Emerson, then onward to Joscha Bach and neo-advaita techno-enlightenment) as the seeds and fertilizers for an alternative definition of AI-interdependent-transcendence. The trilogy recapitulates, to some degree, the conceptual pathway of Ray Kurzweil's provocative prophetic books, The Age of Intelligent Machines (1990) and The Age of Spiritual Machines (1999), without considering modalities of bliss or doom, utopia or oblivion. It also shifts the thematic register of Mark Hansen's compelling Feed-Forward[^37] and N. Katherine Hayles's Unthought (both of which I began reading after writing this essay) from phenomenology toward a technological pragmatism. It is hoped that these speculative complexities will open minds to the mysterious momentum of this particular synergetic historical moment.

[^36]: Romero, Alberto. “GPT-4’s Secret Has Been Revealed.” Substack newsletter. The Algorithmic Bridge. June 23, 2023. https://thealgorithmicbridge.substack.com/p/gpt-4s-secret-has-been-revealed. “On June 20th, George Hotz, founder of self-driving startup Comma.ai leaked that GPT-4 isn’t a single monolithic dense model (like GPT-3 and GPT-3.5) but a mixture of 8 x 220-billion-parameter models. Later that day, Soumith Chintala, co-founder of PyTorch at Meta, reaffirmed the leak. Just the day before, Mikhail Parakhin, Microsoft Bing AI lead, had also hinted at this.”

[^37]: After reading this essay, Scott Rettberg suggested I read Hansen, Mark B. N. 2015. Feed-Forward: On the Future of Twenty-First-Century Media. Chicago ; London: University of Chicago Press. Feed-Forward articulates a similar trajectory at a much deeper phenomenological depth thru the trajectory of embodiment-individuation-transcendence.

A Note on an AI-Assisted Methodology

A significant portion of this essay was written seated on moss or rocks, under trees, in the mountainous forests surrounding Bergen, Norway. Most of the first draft occurred as speaking, speaking to a Google Pixel phone into the Recorder app, which automatically provides audio and a transcription, in real time, even when offline. Later that transcription was fed to GPT-4, which was prompted to retain the core ideas, tidy it up, and correct the punctuation (I tend to pause a lot while sitting in a forest thinking, and the Recorder app tends to punctuate over-vehemently). While editing, occasionally I consulted GPT-4 for lists of relevant canonical articles or brain regions; occasionally it suggested articles of which I was not aware; occasionally it hallucinated. So it is an AI-assisted essay, initially written by AI transcription, edited by AI, researched by AI, about AI: a symbiotic recursive entwining.

Estimated parameter counts for LLMs: including the unknown parameters of GPT-4, GPT-5, and (a probably hyperbolic guess for) Gemini*