Marino and Berry discuss their engagement in weekly conversations about the nature of "code, of ELIZA, its descendants" and how each of these programs have circulated within our critical code culture, along with other "contemporary conversation agents like Siri and ALEXA and, of course, ChatGPT."

Critical Code Studies: 10 years later

Almost 20 years ago, Mark Marino’s Critical Code Studies manifesto in electronic book review called for scholars to explore the extra-functional significance of computer source code in a new field he called Critical Code Studies (Marino, 2006a). After eight biannual working groups, several books, and a special issue of Digital Humanities Quarterly in 2023, not to mention the eponymous manuscript from MIT Press, Critical Code Studies as an approach to algorithms, software and code is thriving (Marino, 2020; Marino and Douglass, 2023). The original essay has been inspirational for a range of practices based in digital media and media archaeology, science and technology studies and digital humanities, eliciting code critical approaches tied to social concerns, from feminisms to queer and trans studies to race studies to post-colonialism and indigeneity. An international community of scholars from many disciplines have worked individually and collaboratively to explore the meaning of code. The working groups, the collaborative projects (from 10 PRINT to Reading Project to “Entanglements”), and the Humanities and Critical Code Studies Lab have underscored the necessity of diversity in the methods, identities of readers, and theoretical frameworks for the analysis and interpretation of code (Montfort et al., 2013). So far much of the work in CCS has been concerned with source-code of a more traditional kind, particularly procedural or object-oriented code, made up of discrete components and tending to be represented as text files. But, with the rise of AI/ML this opens up a new and challenging frontier for CCS and the stakes are made higher due to the difficulty of understanding the new “code” of, for example, Large Language Models, when there appears, on the surface no code at all. One approach to engaging with this new problematic is to examine historical examples of artificial intelligences, and so we have begun to apply the close-reading techniques of CCS to the recently unearthed original source code of the chatbot created by Joseph Weizenbaum in 1966 known as ELIZA1See http://findingeliza.org (Weizenbaum, 1966). Developed at a time of unrestrained development of artificial intelligence, not unlike today, ELIZA (and its code) has much to say to the contemporary conversation about AI.

ELIZA is a system for conversational human-computer interaction. ELIZA was named for George Bernard Shaw’s Eliza Doolittle from his sociolinguistic satire Pygmalion, though more directly from the cinematic musical adaptation My Fair Lady (released in 1964). Like its namesake, the ELIZA system can be instructed (or encoded) to speak after the fashion of all sorts of interactors (Weizenbaum, 1966, 1976b). Most people know ELIZA through (and as) its most famous persona, DOCTOR, with which the system is often conflated. DOCTOR is a script that “runs” on ELIZA and which performs a simplified version of Rogerian psychoanalysis, asking questions and reflecting back answers in a clever pattern matching and transformation system. A script creates a conversational persona on the ELIZA system.

Since Weizenbaum published his famous article describing ELIZA, people have been fascinated by this software and the possibilities it appeared to suggest about conversational human-computer interaction (Turkle, 1997). Indeed, the talking computers of science fiction, such as Hal in 2001: A Space Odyssey and more recent film examples such as Samantha in Her as well as contemporary conversation agents like Siri and ALEXA and, of course, ChatGPT all owe some inspiration to this first chatbot. ELIZA has been hugely influential, so much so that we call the tendency of humans to assign personhood or sentience to conversational programs. Indeed, ELIZA was critical to administering a “shock” to Joseph Weizenbaum, who after observing people’s attribution of intelligence to the system, what we now call the ELIZA Effect, turned towards developing social critiques of artificial intelligence becoming a “heretic” within his own department at MIT. As he became a vocal opponent to the race for artificial intelligence, he became persona non grata in the field he helped create, detailing his concerns about its detriment to humanity in Computing Power and Human Reason (Weizenbaum, 1976).

Our current critical code studies examination of ELIZA allows us to see more deeply the environment within which Weizenbaum was working, the detail of his development process, and his innovative solutions to material constraints, all of which complicates the history of this famous code artifact – from evidence for at least five quite different major versions of ELIZA, to its use as an educational tool to teach poetry and mathematics. Through our examination, we have come to know ELIZA as a family of programs that not only runs scripts of different personas or tasks roles, but also serves as a tool for the programmer, as a writer, as well as a programmer, to make edits within a given script while conversing. We offer a preview of those findings here, document further on our website Finding Eliza, and have a forthcoming book through MIT Press that pursues the code further.

ELIZA has been discussed in books from Turkle’s Life on the Screen to Janet Murray’s Hamlet on the Holodeck to Noah Wardrip-Fruin’s Expressive Programming (Turkle, 1997; Murray, 1998; Wardrip-Fruin, 2009). However, until now, we have not had the actual source code for the ELIZA system itself. Drawing on a range of disciplines, from digital humanities, media, philosophy, computer science and history we have undertaken a critical case study through a critical code study of this software. The ELIZA team has been working together formally since 2020, but many of our individual connections to ELIZA go back decades.

Critical Code Studies as Fieldwork.

ELIZA and DOCTOR have been an object of interest for Critical Code Studies since its inception. In fact, attempts to interpret chatbots inspired CCS itself. While Marino was writing his dissertation on conversation agents (2006b), he wanted to perform what Lev Manovich and N. Katherine Hayles call “media specific analysis” on these objects and he decided that would require examining the source code (Hayles, 2002; Manovich, Malina and Cubitt, 2002). However, the original source code for ELIZA was not openly available, only the script and many alternative implementations.

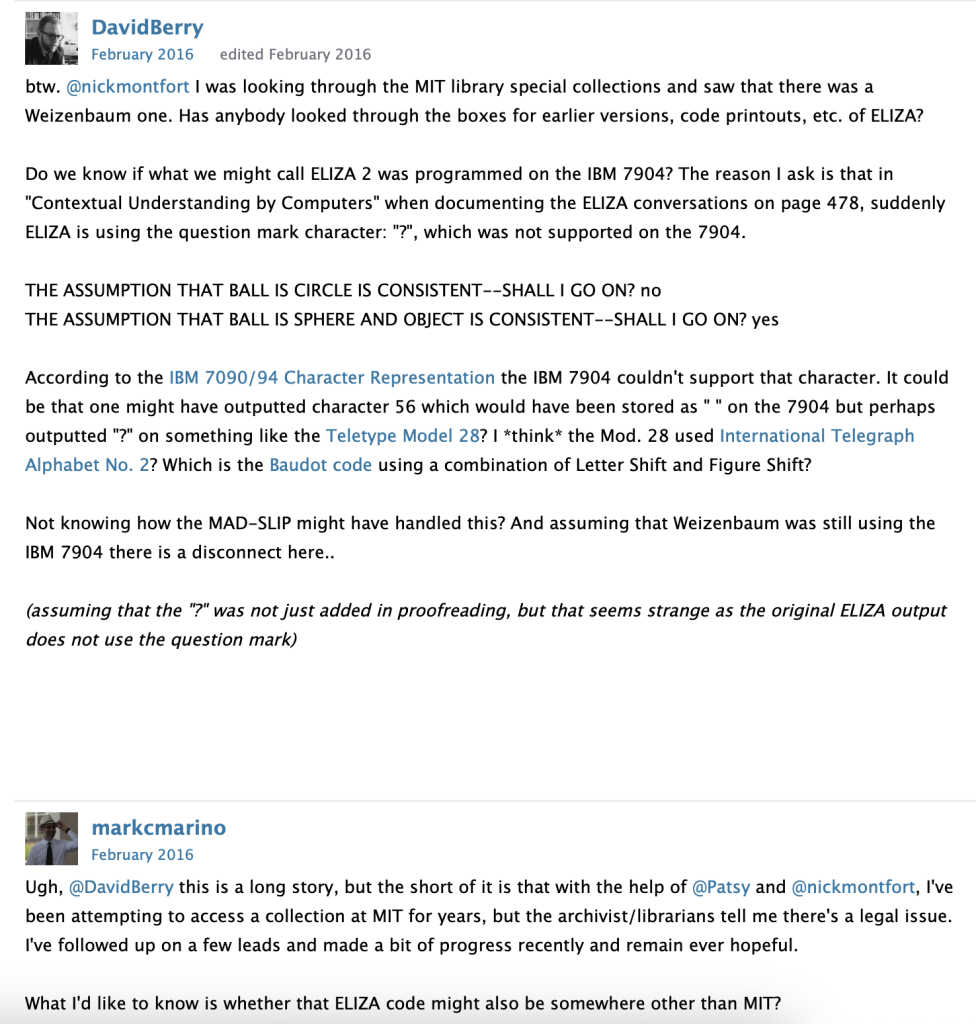

In 2016 and then again in 2022 Berry was asked to facilitate a discussion about ELIZA at Critical Code Studies Work Groups organized by Marino and Jeremy Douglass. This working group was titled Archaeologies of Code: Reading ELIZA and aimed to undertake a collective close reading of the ELIZA system together with contextualizing its historical significance. But it became quite clear during the discussion that the original source code appeared to have been lost and with its loss a crucial piece of the history of AI was lacking (Berry et al., 2023).

Only the algorithm described in Weizenbaum’s 1966 ACM article remained, along with many code re-implementations over the subsequent 50 years, and of which the authors confirmed that they hadn’t seen the original source code when writing, including Bernie Cosell’s Lisp version, “Doctor” and Shrager’s Basic version, “Eliza”. Much has been written about the DOCTOR script, but scholars have only had access to Weizenbaum’s diagram of the ELIZA system. Therefore, at that time, scholars could only comment on the abstraction of the algorithm, not the implementation, the details of the code itself.

When Shrager contacted Berry in 2021 a few years after the 2016 CCSWG, they began to wonder again about the location of the ELIZA source code. Thanks to 10 PRINT collaborator and research librarian Patsy Baudoin, Marino had a lead on a box at MIT in the Weizenbaum archives and Berry told Shrager about the possible chance of the code being located in the Weizenbaum archives at MIT. During a Zoom call during Covid with MIT archivist Myles Crowley, they were able to open a key box. Remarkably a version of the ELIZA source code was tucked inside one of the files printed on its original fanfold paper. This ELIZA was written in an obscure programming language called MAD-SLIP and was only 411 lines long. As reading the code required an expert in SLIP, Shrager also enrolled Arthur Schwarz, the creator of the GNU implementation of SLIP to assist the team.

Assembling the Team

One of the key elements for exploring the complex code of an artifact like ELIZA is having a team with sufficient technical and critical skills for interpreting it. Jeff Shrager had firsthand insights into ELIZA and its early adaptations. Through his work at Stanford, he had access to a key realm of Weizenbaum’s history combined with a lifelong interest in the history of computing. David M. Berry brought insight into the philosophy of software and a critical theory of the digital. As an experienced programmer, Anthony Hay brought expertise in code, eventually creating a faithful reproduction of ELIZA in C+ and later in JavaScript. Another lifelong programmer, Arthur Schwarz brought a fluency in SLIP and a wealth of knowledge of the culture of programming at the time of ELIZA’s emergence. Mark Marino brought expertise in critical code studies, a set of methods for interpreting the code. Having built her own novel chatbot, MrMind, artist Peggy Weil could contribute insights gleaned from a global online conversation conducted over 16 years, about what makes us human. Peter Milican brought additional expertise in the philosophy of computing together with long experience in developing an ELIZA clone called Elizabeth. And lastly, Sarah Ciston brought her research into critical AI as well as insights from making her chatbot ladymouth, which responds to misogynistic Reddit posts with passages of Feminist theory.

Since the original meeting, we have continued our critical code studies work by visiting the Weizenbaum archives at MIT as well as additional archives at UCLA and Stanford. We have interviewed those who have worked on Project MAC and others who knew Weizenbaum. We have also examined the documentation of ELIZA and MAD-SLIP to piece together the design, development and implementation of the software. We set out to perform a close reading of the piece, following the model of previous collaborations, such as, 10 PRINT CHR$(205.5+RND(1)); : GOTO 10 (Montfort et al., 2013), the collaborative reading of a single line of code; Reading Project (Pressman, Marino, & Douglass 2015), a collaborative reading of one work of electronic literature; and “Entanglements” (Marino, Leong, & Pressman, 2022), a collaborative reading of an interactive installation work.

With any project, reading the code is a complex and iterative process. With ELIZA, though the code is just a few hundred lines, the paratexts are extensive, from directly related materials, such as the programming manual for SLIP and transcripts of conversations, to more peripheral materials, such as interviews and written correspondence with Weizenbaum. We have collectively read through the code in online video chats, moving line by line through the code. Sometimes we would only make it through five lines in one hour due to questions fragments of the code would throw up in terms of its design and hidden technical functioning. This process is a hermeneutic spiral of learning because code inspires conversation and is an entry point for analysis of many aspects of culture – something that Marino and Berry have discussed previously in critical code studies forums as iteracy (Berry, 2012, p. 8). And as previously learned in the 10 PRINT project, sometimes understanding code requires writing code or bringing in a seemingly unrelated study, such as literature or theatre studies. This is to say that the pleasure (and sometimes pain) of critical code studies grows from an engagement with a set of symbols that are true touchstones for exploration.

Our team has engaged in weekly conversations about the nature of the code, of ELIZA, its descendants, and how they all have circulated through culture. The act of critical code studies has proven not just a matter of assembling a team but of holding an ongoing problematic about the nature of the code. While some might imagine critical code studies to be worked out in a laboratory, our conversations have been more suited to the seminar room, the library, and sometimes a basement full of media artifacts. The arguments throughout are lively and sometimes contentious, the process of discovery and sharing completely unpredictable findings and ideas –this could be called the joy of critical code studies.

About the code:

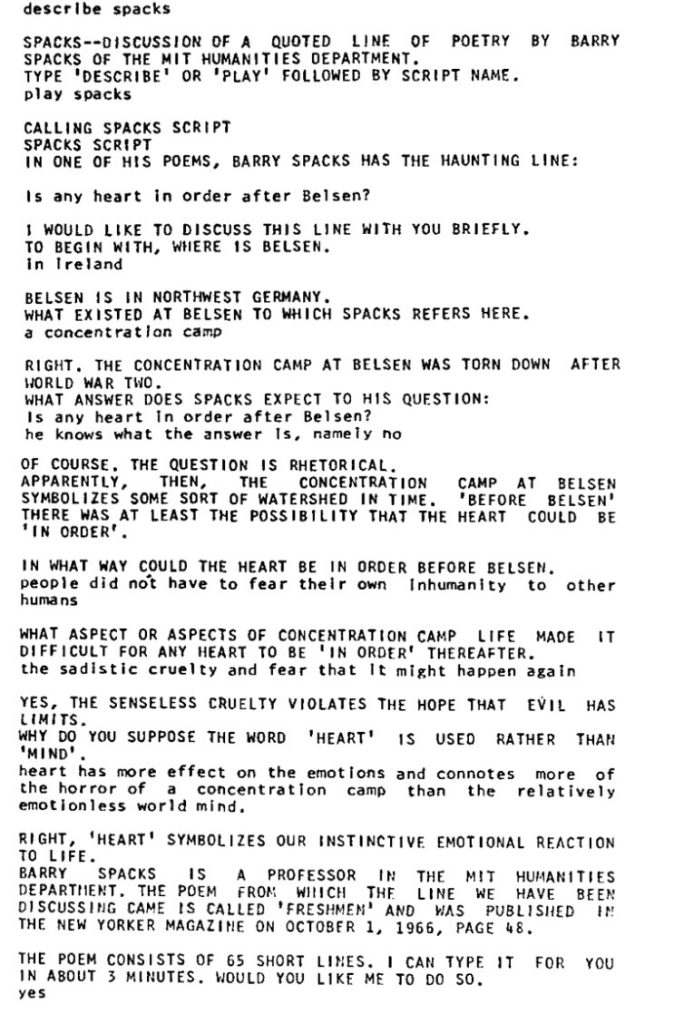

The two main files of source code in our investigation are ELIZA, the main software system, and DOCTOR, the script that runs the Rogerian psychotherapist on top of the ELIZA software. The DOCTOR script includes keywords for pattern-matching and as well as the rules for replies. However, it is the ELIZA system that makes these conversation scripts possible. Written in MAD-SLIP, the system could be used for a wide variety of scripts, and we have records of a number of alternatives that Weizenbaum and others developed, including ARITHM, GIRL, SPACKS, and SYNCTA. We also have documentation for the system being used to analyse poetry. Other programmers and educationalists adapted the ELIZA system for other teaching purposes. However, it was always the DOCTOR script that was most memorable.

One of the challenges of reading ELIZA is its archaic programming language. ELIZA was written on the IBM 7094 in a language called MAD. MAD (Michigan Algorithm Decoder) is a procedural language semantically similar to FORTRAN. Currently, there are very few programmers versant in MAD. Many assume that DOCTOR was written in LISP, largely due to Kenneth Colby’s LISP version; however, the program was actually written in MAD, calling SLIP subroutines, which our team has likened to contemporary methods of software development, allowing interaction between different software components through what are now called APIs. Like LISP, SLIP (Symmetric List Processing) performs operations on acyclic lists or S expressions.

Our research documents at least five key versions of the ELIZA code, although the convention of numbering versions was not common during the 1960s. For example, ELIZA 1965a delimits sentences with only “.” and “,” (evidence from MIT archive flowcharts). ELIZA 1965b: Delimits sentences with “.” and “,” and “but” and lacks the important NEWKEY function. It also includes an undocumented CHANGE function, and hard-coded messages. This is the version we uncovered in the MIT archives. Other versions include, ELIZA 1966, described in the CACM article which crucially includes the NEWKEY function, keyword stack and “But” delimiter, although the article interestingly omits to specify it.[2] We believe that ELIZA 1967 adds sophisticated script handling, as seen in evidence from descriptions in Weizenbaum (1967) and Taylor (1968) but also in the extant ARITHM, F29, FIGURE scripts we have discovered in the archive (Weizenbaum, 1976b). Finally, ELIZA 1968+, includes plans which we found described in Taylor (1968). However, the scripts SPACKS, INTRVW, and FVP1 also give evidence of much more sophisticated programming through the text of the scripts developed by Walter E. Daniels.

As we have been exploring the code, we have had our share of discoveries which we are documenting in a collaborative book manuscript (in the pattern of 10 PRINT) and we gladly share a few of the more notable observations. Perhaps most remarkable for its time is the inclusion of a CHANGE method. CHANGE allowed the ELIZA system to enter into edit mode, so the user could alter scripts while interfacing with it. In other words, a participant interacting with DOCTOR can type an asterisk and enter into an edit mode, make changes to the SCRIPT and then resume the conversation. With this clever contrivance, Weizenbaum turned ELIZA into a kind of platform for live editing and revision of the scripts, a kind of live authoring system that would have been unheard of at the time. This interactivity was a feature that most ELIZA/DOCTOR clones do not possess and whose programmers and later documenters probably did not even realize existed. However, we argue that this very contrivance is what makes ELIZA a conversational programming system, in which programming, or scripting, becomes enmeshed in the conversational exchange.

Other discoveries have emerged from close reading the transcripts against the code and scripts. While recreating the ELIZA/DOCTOR program, we have examined the transcript of the conversation with DOCTOR to make sure our version could replicate the exchange. That exploration clears up a long-standing error in the documentation. In the CACM article, Weizenbaum describes two delimiters to the end of phrases, period and comma (37). However, when analyzing the DOCTOR script in relation to the dialogue in the CACM article, we determined that the two delimiters “.” and “,” are insufficient to create the conversation. That exchange is only possible if “BUT” is also used to mark the end of a line for pattern matching. We could only discover this distinction through our methodical close reading of the code, the script, and the dialogue.

Not all of the discoveries have been in the code but rather through engagement with the code. As Anthony and Arthur have worked to recreate the ELIZA system, they have tested their DOCTOR scripts using the published dialogue, which is one of the ways we discovered the new BUT delimiter. However, in our treasure hunt through the Weizenbaum archives,we encountered not one but two dialogues submitted with the ACM article, the first being a rather pedestrian exchange and the second being the dramatic entanglement of gender politics that we know from the article. Furthermore, in the copies of that famous dialogue we discovered handwritten edits that suggested Weizenbaum the editor, not just presenting computer output but working to hone the text for re-presentation to an audience. We are not suggesting that he manufactured the dialogue, but we believe that he used the transcript to tweak the system for more resonant dialogue. In this way, the paratexts have helped us witness the process of the code’s development. Of course, whether or not Weizenbaum wrote every line of the ELIZA source code is also still an open question.

Through our examination of his code, we have found ourselves developing our understanding of Joseph Weizenbaum. This has involved trying to reconcile his many facets. A deeply moral man, Weizenbaum can be called the first voice of opposition to the unrestrained rush toward artificial intelligence. Weizenbaum was at once the programmer who created the system that drew interactors into long personal exchanges and the critic who railed against its potential use as a profitable computer therapist. He would certainly have much to say to and about AI developments today. Having fled the Holocaust as a child, he understood the dehumanizing effects of technology, but when he stood up against the misuse of artificial intelligence through his book Computer Power and Human Reason, he was ostracized by his academic community (Weizenbaum, 1976; ben-Aaron, 1985). In our exploration of his archives, we found evidence of that moral man and the impact of his ostracization following his strong criticisms of AI, as he became isolated in his department at MIT and his colleagues treated him as a heretic to his field. In our exploration of his paperwork, we found his application for his leave to go study humanities at Stanford, from which he returned quoting Hannah Arendt about our militaristic inhumanity. In our journey through his source code, we have found a deeper encounter with Weizenbaum’s humanity, both his intellect and his heart.

As we continue to research ELIZA and its legacy, we want to mark this project as a sign of the vitality of critical code studies and the extent to which it has evolved since the original essay by Marino in ebr (Marino, 2006a). Exploring the code of ELIZA with the tools of critical code studies in this deeply archaeological manner has uncovered an historical moment when computational innovations were racing side-by-side with an unchecked rush toward artificial intelligence, one not unlike our own. With additional reading tools, the assistance of LLMs, increased inclusion and diversification of the field, and the development of new methods and approaches, we argue that critical code studies offers innovative opportunities to understand and discuss our cultures through the entry point of the powerful symbols of code.

Bibliography

ben-Aaron, D. (1985) ‘Weizenbaum examines computers and society’, The Tech, p. 1.

Berry, D.M. (ed.) (2012) Understanding digital humanities. Houndmills, Basingstoke, Hampshire ; New York: Palgrave Macmillan.

Berry, D.M. et al. (2023) ‘Finding ELIZA - Rediscovering Weizenbaum’s Source Code, Comments and Faksimiles’, in M. Baranovskaa and S. Höltgen (eds) Hello, I’m Eliza. 50 Jahre Gespräche mit Computern. 2nd edn. Bochum: Projektverlag, pp. 247–248. Available at: https://www.projektverlag.de/Hello_I_am-Eliza.

Hayles, N.K. (2002) Writing Machines. Cambridge, Mass: MIT Press.

Manovich, L., Malina, R.F. and Cubitt, S. (2002) The Language of New Media. New Ed edition. Cambridge, Mass.: MIT Press.

Marino, M.C. (2006a) ‘Critical Code Studies’, Electronic Book Review [Preprint]. Available at: https://electronicbookreview.com/essay/critical-code-studies/ (Accessed: 21 February 2024).

Marino, M.C. (2020) Critical code studies. Cambridge, Massachusetts: The MIT Press (Software studies).

Marino, M. C., Diana Leong, and Jessica Pressman. “Entanglements.” The Digital Review, no. 2 (September 2022). https://thedigitalreview.com/issue02/marino_entanglements/index.html.

Marino, M. C. (2006b). I, Chatbot: The Gender and Race Performativity of Conversational Agents [PhD Thesis]. University of California, Riverside.

Marino, M.C. and Douglass, J. (2023) ‘Introduction: Situating Critical Code Studies in the Digital Humanities’, Digital Humanities Quarterly, 017(2).

Marino, M. C., Leong, D., & Pressman, J. (2022). Entanglements. The Digital Review, 2. https://thedigitalreview.com/issue02/marino_entanglements/index.html

Montfort, N. et al. (2013) 10 PRINT CHR$(205.5+RND(1)); : GOTO 10. The MIT Press.

Murray, J.H. (1998) Hamlet on the holodeck: the future of narrative in cyberspace. Cambridge, Massachusetts: MIT Press.

North, S. (1977, August). Psychoanalysis(?), by Computer… Creative Computing, 3(4), 100–103.

Pressman, Jessica, Mark C. Marino, and Jeremy Douglass. Reading Project: A Collaborative Analysis of William Poundstone’s Project for Tachistoscope {Bottomless Pit}. 1 edition. Iowa City: University Of Iowa Press, 2015.

Shrager (2023) ELIZA in BASIC. In M Baranovska-Bölter, S Höltgen (Eds.) Hello, I’m ELIZA. 95-102.

Turkle, S. (1997) Life on the screen: identity in the age of the Internet. New York: Touchstone.

Wardrip-Fruin, N. (2009) Expressive Processing: Digital Fictions, Computer Games, and Software Studies. Cambridge, Mass: MIT Press.

Weizenbaum, J. (1966) ‘ELIZA - A Computer Program For the Study of Natural Language Communication Between Man And Machine’, Communications of the ACM, 9(1), pp. 36–45.

Weizenbaum, J. (1976) Computer power and human reason: from judgment to calculation. San Francisco: W. H. Freeman and Company.