Tech-TOC: Complex Temporalities in Living and Technical Beings

Katherine Hayles uses Steve Tomasula's multimodal TOC for a significant engagement with the temporal processuality of complex technical beings. Drawing on Bergson's "duration" and its elaboration in recent theories of technicity and consciousness, Hayles explores the complex temporal enfoldings of living and technical beings, showing that Tomasula's new media novel both narrates and materially embodies such assemblages.

At least since Henri Bergson’s (2005, 1913) concept of Duration, a strong distinction has been drawn between temporality as process (according to Bergson, unextended, heterogeneous time at once multiplicitous and unified, graspable only through intuition and human experience) and temporality as measured (homogeneous, spatialized, objective and “scientific” time). Whatever its contributions to the history of philosophy, the distinction has a serious disadvantage: although objects, like living beings, exist within Duration, there remains a qualitative distinction between the human capacity to grasp Duration and the relations of objects to it. Indeed, there can be no account of how objects experience Duration, for, lacking intuition, they may manifest Duration but not experience it. What would it mean to talk about an object’s experience of time, and what implications would flow from this view of objecthood?

Exploring these questions requires an approach that can recognize the complexity of temporality with regard to technical objects. Such an approach opens the door to a series of provocative questions. How is the time of objects constructed as complex temporality? What human cognitive processes participate in this construction? How have the complex temporalities of objects and humans co-constituted one another through evolutionary processes? Along what time scales do interactions occur between humans and technical objects, specifically networked and programmable machines? What are the implications of concatenating processual and measured time in the context of digital technologies? What artistic and literary strategies explore this concatenation, and how does their mediation through networked and programmable machines affect it?

A promising line of inquiry runs through evolution. Evolution has transformative change as its center; it also aims at explanatory patterns that are manifest locally (through individuals) and globally (through populations), making possible multi-scale analyses. Nearly half a century ago, the French “mechanologist” Gilbert Simondon (2001, 1958) proposed an evolutionary explanation for the regularities underlying transformations of what he called “technical beings.” Simondon’s analyses remain useful primarily because of his focus on general principles combined with detailed expositions of how they apply to specific technologies. Working through Simondon’s concepts as well as their elaboration by such contemporary theorists as Adrian Mackenzie, I will discuss the view that technical objects embody complex temporalities enfolding past into present, present into future. An essential component of this approach is a shift from seeing technical objects as static entities to conceptualizing them as temporary coalescences in fields of conflicting and cooperating forces. While hints of Bergsonian Duration linger here, the mechanologist perspective starts from a consideration of the nature of technical objects rather than from the nature of human experience.

The object-centered view of technological change will then be related to human evolution through the theory that humans and tools have co-evolved (technogenesis). In the fabrication of tools and the associated co-evolution of human biology and cognition, attention plays a special role, for it focuses on the detachment and re-integration of technical elements that Simondon argues is the essential mechanism for technological change. Its specialness notwithstanding, attention is nevertheless a limited cognitive faculty whose boundaries are difficult to see because it is at the center of consciousness, whereas unconscious and nonconscious faculties remain partially occluded, despite being as important (or more so) in interactions with technological environments. Human cognition as a whole (including attentive focus, unconscious perceptions, and nonconscious cognitions) is in dynamic interplay with the tools it helps to bring into being, in a feedback process that Andy Clark calls continuous reciprocal causation (CRC; Clark, 2008, p. 24).

While a mechanologist perspective, combined with technogenesis, provides an explanatory framework within which complex temporalities can be seen to inhabit both living and technical beings, the pervasive human experience of time as measured and spatialized cannot be so easily dismissed. To explore the ways in which Duration and spatialized temporality create fields of contention seminal to human cultures, I will turn to an analysis of Steve Tomasula’s TOC: A New Media Novel (2009), a multimodal electronic novel. Marked by aesthetic ruptures, discontinuous narratives and diverse modalities including video, textual fragments, animated graphics and sound, TOC creates a rich assemblage in which the conflicts between measured and experienced time are related to the invention, development and domination of the Influencing Engine, a metaphoric allusion to computational technologies. Composed on computers and played on them, TOC explores its conditions of possibility in ways that perform as well as demonstrate the interpenetration of living and technical beings, processes in which complex temporalities play central roles.

Technics and Complex Temporalities

Technics, in Simondon’s view, is the study of how technical objects emerge, solidify, disassemble, and evolve. In his exposition, technical objects fall into three categories: technical elements, for example, the head of a stone axe; technical individuals, for example, the compound tool formed by the head, bindings and shaft of a stone axe; and technical ensembles, in this case, the flint piece used to knap the stone head, the fabrication of the bindings from animal material, and the tools used to shape the wood shaft, as well as the toolmaker who crafts this compound tool. The technical ensemble gestures toward the larger social/technical processes through which fabrication comes about; for example, the toolmaker is himself embedded in a society in which the knowledge of how to make stone axes is preserved, transmitted, and developed further. Obviously, it is difficult to establish clear-cut boundaries between a technical ensemble and the society that creates it, leading to Bruno Latour’s observation that “tools are the extension of social relations to the non-human.” Whereas the technical ensemble points toward complex social structures, the technical element shows how technological change can come about by being detached from its original ensemble and embedded in another, for example, when the stone axe head becomes the inspiration for a stone arrowhead. The ability of technical elements to “travel” is, in Simondon’s view, a principal factor in technological change.

The motive force for technological change is the increasing tendency toward what Simondon calls “concretization,” innovations that resolve conflicting requirements within the milieu in which a technical individual operates. Slightly modifying his example of an adze, I illustrate with the conflicting requirements of the metal head of an axe. The axe blade must be hard enough that when it is driven into a piece of cordwood, for example, it will cut the wood without breaking. The hole through which the axe handle passes, however, has to be flexible enough to withstand the force transmitted from the blade without cracking. The conflicting requirements for flexibility and rigidity are negotiated through the process of tempering, in which the blade is hammered so that its crystal structure changes, giving it the necessary strength, while the thicker part of the head retains its original properties.

Typically, when a technical individual is in early stages of development, conflicting requirements are handled in an ad hoc way, for example in the separate system used in a water-cooled internal combustion engine that involves a water pump, radiator, tubing, etc. When problems are solved in an ad hoc fashion, the technical individual is said to be abstract, in the sense that it instantiates separate bodies of knowledge without integrating them into a unitary operation. In an air-cooled engine, by contrast, the cooling becomes an intrinsic part of the engine’s operation; if the engine operates, the cooling necessarily takes place. As an example of increasing concretization, Simondon instances exterior ribs that serve both to cool a cylinder head and simultaneously provide structural support so that the head will remain rigid during repeated explosions and impacts. Concretization, then, is at the opposite end of the spectrum from abstraction, for it integrates conflicting requirements into multi-purpose solutions that enfold them together into intrinsic and necessary circular causalities. Simondon correlates the amount of concretization of a technical individual with its technicity; the greater the degree of concretization, the greater the technicity.

The conflicting requirements of a technical individual that is substantially abstract constitute, in Simondon’s view, a potential for innovation, or in other words, a repository of virtuality that invites transformation. Technical individuals represent, in Adrian Mackenzie’s (2002, p. 17 ff.) phrase, meta-stabilities, that is, provisional solutions of problems whose underlying dynamics push the technical object toward further evolution. Detaching a technical element from one individual (for example, a transistor from a computer) and embedding it in a new technical ensemble or individual (for example, a radio, cell phone, or microwave) requires solutions that move the new milieu toward greater concretization. These solutions sometimes feed back into the original milieu of the computer to change its functioning as well. In this view, technical objects are always on the move toward new configurations, new milieux, and new kinds of technical ensembles. Temporality is something that not only happens within them but also is carried by them in a constant dance of temporary stabilizations amid continuing innovations.

This dynamic has implications for the “folding of time,” a phenomenon Bruno Latour (1994) identifies as crucial to understanding technological change. Technical ensembles, as we have seen, create technical individuals; they are also called into existence by technical individuals. The automobile, for example, called a national network of paved roads into existence; it in turn was called into existence by the concentration of manufacture in factories, along with legal regulations that required factories to be located in separate zones from housing. In this way, the future is already pre-adapted in the present (future roads in present cars), while the present carries along with it the marks of the past, for example in the metal axe head that carries in its edge the imprint of the technical ensemble that tempered it (in older eras, this would include a blacksmith, forge, hammer, anvil, bucket of water, etc.). On a smaller scale, the past is enfolded into the present through skeuomorphs, details that were previously functional but have lost their functionality in a new technical ensemble. The “stitching” (actually an impressed pattern) on my Honda Accord vinyl dashboard covering, for example, recalls the older leather coverings that were indeed stitched. So pervasive are skeuomorphs that once one starts looking for them, they appear to be everywhere; why, if not for the human need to carry the past into the present? These enfoldings (past nestling inside present, present carrying the embryo of the future) constitute the complex temporalities that inhabit technics.
When devices are created that make these enfoldings explicit, for example in the audio and video recording devices that Bernard Stiegler (1998) discusses, the biological capacity for memory (which can be seen as an evolutionary adaptation to carry the past into the present) is exteriorized, creating the possibility, through technics, for a person to experience through complex temporality something that never was experienced as a first-hand event, a possibility that Stiegler calls tertiary retention. This example, which Stiegler develops at length and to which he gives theoretical priority, should not cause us to lose sight of the more general proposition: that all technics imply, instantiate, and evolve through complex temporalities.

The Co-Evolution of Humans and Tools

Simondon distinguishes between a technical object and a [mere] tool by noting that technical objects are always embedded within larger networks of technical ensembles, including geographic, social, technological, political and economic forces. For my part, I find it difficult to imagine a tool, however humble, that does not have this characteristic. Accordingly, I depart from Simondon by considering tools as part of technics, and indeed an especially important category because of their capacity for catalyzing exponential change. Anthropologists usefully define a tool as an artifact used to make other artifacts. The definition makes clear that not everything qualifies as a tool: houses, cars, and clothing are not normally used to make other artifacts, for example. One might suppose that the first tools were necessarily simple in construction, because by definition, there were no other tools to aid in their fabrication. In the new millennium, by contrast, the technological infrastructure of tools that make other artifacts is highly developed, including laser cutters, 3-D printers, and the like, as well as an array of garden-variety tools that populate the garages of many US households, including power saws, nail hammers, cordless drills, etc. Arguably, the most flexible, pervasive, and useful tools in the contemporary era are networked and programmable machines. Not only are they incorporated into an enormous range of other tools, from computerized sewing machines to construction cranes, but they also produce informational artifacts as diverse as databases and Flash poems.

The proposition that humans co-evolved with the development and transport of tools is not considered especially controversial among anthropologists. For example, the view that bipedalism co-evolved with tool manufacture and transport is widely accepted. Walking on two legs freed the hands, and the resulting facility with tools bestowed such strong adaptive advantage that the development of bipedalism was further accelerated, in a recursive, upward spiral of continuous reciprocal causation. So too with the opposable thumb, for much the same reasons: finer motor control was especially useful in making and manipulating artifacts, for example weapons such as the spear and the bow and arrow.

The constructive role of tools in human evolution involves cognitive as well as muscular and skeletal changes. Stanley Ambrose (2001), for example, has linked the fabrication of compound tools (tools with more than one part, such as a stone axe) to the rapid enlargement of Broca’s area in the brain and the consequent expansion and development of language. According to his argument, compound tools involve specific sequences for their construction, a type of reasoning associated with Broca’s area, which also is instrumental in language use, including the sequencing associated with syntax and grammar. Tool fabrication in this view resulted in cognitive changes that facilitated the capacity for language, which in turn further catalyzed the development of more (and more sophisticated) compound tools.

Such changes are well documented by paleoanthropologists working on evolutionary time scales, over which changes require hundreds of generations and thousands of years to develop and proliferate through a population. What about changes that occur over much shorter time spans, for example, the six decades from 1950 to the present? To explore the possibility for technogenesis in this time frame, it is necessary to rethink the relation of technics and human cognition in different terms. We may begin by bringing to the fore a cognitive faculty under-theorized within the discourse of technics: the constructive role of attention in fabricating tools and creating technical ensembles. To develop this idea, I distinguish between materiality and physicality. The physical attributes of any object are essentially infinite (indeed, this inexhaustible repository is responsible for the virtuality of technical objects). As I discussed in My Mother Was a Computer: Digital Subjects and Literary Texts (2005), a computer can be analyzed through any number of attributes, from the rare metals in the screen to the polymers in the case to the copper wires in the power cord, and so on ad infinitum. Consequently, physical attributes are necessary but not sufficient to account for technical innovation. What counts is rather the materiality of the object. Materiality comes into existence, I argue, when attention fuses with physicality to identify and isolate some particular attribute (or attributes) of interest.

Materiality, then, is unlike physicality in being an emergent property. It cannot be specified in advance, as though it existed ontologically as a discrete entity. Requiring acts of human attentive focus on physical properties, materiality is a human-technical hybrid. Matthew Kirschenbaum’s definitions of materiality in Mechanisms: New Media and the Forensic Imagination (2008) illustrate this point. Kirschenbaum distinguishes between forensic and formal materiality. Forensic materiality, as the name implies, is disclosed through minute examinations of physical evidence that determine the traces of information in a physical substrate, as when, for example, he takes a computer disk to a nanotech laboratory to “see” the bit patterns (with a non-optical microscope). Here attention is focused on determining one set of attributes, and it is this fusion of attention with physical properties that allows Kirschenbaum to detect (or more properly, construct) the materiality of the bit pattern. Kirschenbaum’s other category, formal materiality, can be understood through the example of the logical structures in a software program. Formal materiality, like forensic materiality, must be embodied in a physical substrate to exist (whether as a flow chart scribbled on paper, as a hierarchy of coding instructions written into binary within the machine, or as the firing of neurons in the brain), but the focus now is on form or structure rather than on physical attributes. In both cases, forensic and formal, the emergence of materiality is inextricably bound up with acts of attention. Attention also participates in the identification, isolation, and modification of technical elements that play a central role in the evolution of technical objects.

At the same time, other embodied cognitive faculties also participate in this process. Andy Pickering’s (1995) description of the “mangle of practice” illustrates the point. In my own experience of throwing pots, I typically begin with a conscious idea: the shape to be crafted, the size, texture and glaze, etc. Wedging the clay gives other cognitions a chance to work as I absorb through my hands information about the clay’s graininess, moisture content, chemical composition, etc., which may cause me to modify my original idea. Even more dynamic is working the clay on the wheel, a complex interaction between what I envision and what the clay has a mind to do. A successful pot emerges when these interactions become a fluid dance, with the clay and my hands coming to rest at the same moment. In this process, embodied cognitions of many kinds participate, including unconscious and nonconscious ones running throughout my body and through the rhythmic kicking of my foot, extending into the wheel. That these capacities should legitimately be considered a part of cognition is eloquently argued by Antonio Damasio (2005), supplemented by the work of Oliver Sacks (1998) on patients who have suffered neurological damage and so have, for example, lost the capacity for memory, proprioception, or emotion. As these researchers demonstrate, losing these capacities results in cognitive deficits so profound that they prevent the patients from living anything like a normal life. Conversely, normal life requires embodied cognition that exceeds the boundaries of consciousness and indeed of the body itself.

Embedded cognition is closely related to extended cognition, although the two differ in emphasis and orientation in significant ways. An embedded cognitive approach, typified by the work of anthropologist Edwin Hutchins (1996), emphasizes the environment as crucial scaffolding and support for human cognition. Analyzing what happened when a Navy vessel lost steam power and then electric power as it entered San Diego harbor and consequently lost navigational capability, Hutchins observed the emergence of self-organizing cognitive systems in which people made use of the devices to hand (a ruler, paper, and protractor, for example) to make navigational calculations. No one planned in advance how these systems would operate, because there was no time to do so; rather, folks under extreme pressure simply did what made sense in that environment, adapting to each other’s decisions and to the environment in flexible yet coordinated fashion. In this instance, and many others that Hutchins analyzes, people make use of objects in the environment, including their spatial placements, colors, shapes and other attributes, to support and extend memory, combine ideas into novel syntheses, and in general enable more sophisticated thoughts than would otherwise be possible.

Andy Clark illustrates this potential with a story (quoted from James Gleick, 1993, p. 409) about Richard Feynman, the Nobel Prize-winning physicist, meeting with the historian Charles Weiner to discuss a batch of Feynman’s original notes. Weiner remarks that the papers are “a record of [Feynman’s] day-to-day work,” but Feynman disagrees.

“I actually did the work on the paper,” he said.

“Well,” Weiner said, “the work was done in your head, but the record of it is still here.”

“No, it’s not a record, not really. It’s working. You have to work on paper and this is the paper. Okay?” (Clark, p. xxv).

Feynman makes clear that he did not have the ideas in advance and then write them down. Rather, the process of writing-down was an integral part of his thinking, and the paper and pencil were as much a part of his cognitive system as the neurons firing in his brain. Working from such instances, Clark develops the model of extended cognition (which he calls Extended), contrasting it with a model that imagines cognition happens only in the brain (which he calls Brainbound). The differences between Extended and Brainbound are clear, with the evidence overwhelmingly favoring the former.

More subtle are the differences between the embedded and extended models. Whereas the embedded approach emphasizes human cognition at the center of self-organizing systems that support it, the extended model tends to place the emphasis on the cognitive system as a whole and its enrollment of human cognition as a part of it. Notice, for example, the place of human cognition in this passage from Clark: “We should consider the possibility of a vast parallel coalition of more or less influential forces, whose largely self-organizing unfolding makes each of us the thinking beings we are” (Clark, 2008, pp. 131-2). Here human agency is downplayed, and the agencies of “influential forces” seem primary. In other passages, human agency comes more to the fore, as in the following: “goals are achieved by making the most of reliable sources of relevant order in the bodily or worldly environment of the controller” (2008, pp. 5-6). On the whole, however, it is safe to say that human agency is less a “controller” in Clark’s model of extended cognition than in embedded cognition, and more a player among many “influential forces” that form flexible, self-organizing systems of which it is a part. It is not surprising that there should be ambiguities in Clark’s analyses, for the underlying issues involve the very complex dynamics between deeply layered technological built environments and human agency in both its conscious and unconscious manifestations. Recent work across a range of fields interested in this relation (neuroscience, psychology, cognitive science and others) indicates that the unconscious plays a much larger role than had previously been thought in determining goals, setting priorities, and other activities normally associated with consciousness.
The “new unconscious,” as it is called, responds in flexible and sophisticated ways to the environment while remaining inaccessible to consciousness, a conclusion supported by a wealth of experimental and empirical evidence.

For example, John A. Bargh (2005) reviews research in “behavior-concept priming.” In one study, university students were presented with what they were told was a language test; without their knowledge, one of the word lists was seeded with synonyms for rudeness, the other with synonyms for politeness. After the test, participants exited via a hallway in which there was a staged situation, to which they could react either rudely or politely. Most of those who saw the rude words acted rudely, while those who saw the polite words acted politely. A similar effect has been found in activating stereotypes. Bargh writes, “subtly activating (priming) the professor stereotype in a prior context causes people to score higher on a knowledge quiz, and priming the elderly stereotype makes college students not only walk more slowly but have poorer incidental memory as well” (2005, p. 39). Such research demonstrates “the existence of sophisticated nonconscious monitoring and control systems that can guide behavior over extended periods of time in a changing environment, in pursuit of desired goals” (2005, p. 43). For brain function, this implies that “conscious intention and behavioral (motor) systems are fundamentally dissociated in the brain. In other words, the evidence shows that much if not most of the workings of the motor systems that guide action are opaque to conscious access” (2005, p. 43).

To explain how nonconscious actions can pursue complex goals over an extended period of time, Bargh instances research indicating that working memory (that portion of memory typically supposed to be immediately accessible to consciousness, often called the brain’s “scratch pad”) is not a single unitary structure but rather has multiple components. He summarizes, “the finding that within working memory, representations of one’s intentions (accessible to conscious awareness) are stored in a different location and structure from the representations used to guide action (not accessible) is of paramount importance to an understanding of the mechanisms underlying priming effects in social psychology” (p. 47), providing “the neural basis for nonconscious goal pursuit and other forms of unintended behavior” (p. 48). In this view, the brain remembers in part through the action-circuits within working memory, but these memories remain beyond the reach of conscious awareness.

Given the complexity of actions that people can carry out without conscious awareness, the question arises: what is the role of consciousness, and in particular, what evolutionary driver vaulted primate brains into self-awareness? Bargh suggests that metacognitive awareness, being aware of what is happening in the environment as well as of one’s own thoughts, allows the coordination of different mental states and activities so that they all work together. “Metacognitive consciousness is the workplace where one can assemble and combine the various components of complex perceptual-motor skills” (p. 53). Quoting Merlin Donald (2001), he emphasizes the great advantage this gives humans: “‘whereas most other species depend on their built-in demons to do their mental work for them, we can build our own demons’” (p. 53; Donald, 2001, p. 8; italics in original).

Nevertheless, many people resist the notion that nonconscious and unconscious actions may be powerful sources of cognition, no doubt because it brings human agency into question. This is a mistake, argue Ap Dijksterhuis, Henk Aarts, and Pamela K. Smith (2005). In a startling reversal of Descartes, they propose that thought itself is mostly unconscious. “Thinking about the article we want to write is an unconscious affair,” they claim. “We read and talk, but only to acquire the necessary materials for our unconscious mechanisms to chew on. We are consciously aware of some of the products of thought that sometimes intrude into consciousness … but not of the thinking - the chewing - itself” (p. 82). They illustrate their claim that “we should be happy that thought is unconscious” with statistics about human processing capacity. The senses can handle “about 11 million bits per second,” with about 10 million bits per second coming from the visual system. Consciousness, by contrast, can handle dramatically fewer bits per second. Silent reading proceeds at about 45 bits per second; reading aloud slows the rate to 30 bits per second. Multiplication proceeds at 12 bits per second. Thus they estimate that “our total capacity is 200,000 times as high as the capacity of consciousness. In other words, consciousness can only deal with a very small percentage of all incoming information. All the rest is processed without awareness. Let’s be grateful that unconscious mechanisms help out whenever there is a real job to be done, such as thinking” (p. 82).
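The ratio can be checked with back-of-the-envelope arithmetic. This is a reconstruction from the figures quoted above, not Dijksterhuis, Aarts, and Smith's own calculation: taking the sensory total of about 11 million bits per second at face value, a capacity 200,000 times that of consciousness implies a conscious bandwidth of roughly 55 bits per second, of the same order as the reading and multiplication rates they cite; dividing by the silent-reading rate alone gives a ratio somewhat above 200,000.

$$
\frac{11\,000\,000\ \text{bits/s}}{200\,000} = 55\ \text{bits/s},
\qquad
\frac{11\,000\,000\ \text{bits/s}}{45\ \text{bits/s}} \approx 244\,000 .
$$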

With unconscious and nonconscious motor processes assuming expanded roles in these views, we can now trace the cycles of continuous reciprocal causation that connect embodied cognition with the technological infrastructure, i.e., the built environment. Nigel Thrift (2004) argues that contemporary technical infrastructures, especially networked and programmable machines, are catalyzing a shift in the technological unconscious, that is, the actions, expectations and anticipations that have become so habitual they are “automatized,” sinking below conscious awareness while remaining integrated into bodily routines. Chief among them are changed constructions of space (and therefore changed sequences of temporality), based on “track-and-trace” models. With such technologies as bar codes, SIM cards in mobile phones, and RFID (Radio Frequency Identification) tags, human and nonhuman actants become subject to hypercoordination and microcoordination (see Hayles 2009 for a discussion of RFID). Both time and space are divided into smaller and smaller intervals and coordinated with locations and mobile addresses of products and people, resulting in “a new kind of phenomenality of position and juxtaposition” (Thrift, 2004, p. 186). The result, Thrift suggests, is “a background sense of highly complex systems simulating life because, in a self-fulfilling prophecy … highly complex systems (of communication, logistics, and so on) do structure life and increasingly do so adaptively” (p. 186). Consequently, mobility and universally coordinated time subtly shift what is seen as human.
“The new phenomenality is beginning to structure what is human,” Thrift writes, “by disclosing ‘embodied’ capacities of communication, memory, and collaborative reach in particular ways that privilege a roving engaged interaction as typical of ‘human’ cognition and feed that conception back into the informational devices and environments that increasingly surround us” (p. 186).

Although these changes are now accelerating at unprecedented speeds, they have antecedents well before the twentieth century. Wolfgang Schivelbusch (1987), for example, discusses them in the context of the railway journey, when passengers encountered landscapes moving faster than they had ever appeared to move before. He suggests that the common practice of reading a book on a railway journey was a strategy for coping with this disorienting change, for it allowed the passenger to focus on a nearby object that remained relatively stationary, thus reducing the anxiety of watching what was happening out the window. Over time, passengers came to regard the rapidly moving scenery as commonplace, an indication that the mechanisms of attention had become habituated to faster-moving stimuli. An analogous change has occurred in film between about 1970 and the present. Film directors accept as common wisdom that the time it takes for an audience to absorb and process an image has decreased dramatically as jump cuts, flashing images and an increased pace of image projection have conditioned audiences to recognize and respond to images faster than was previously the case. My colleague Rita Raley tells of showing the film The Parallax View and having her students find it unintentionally funny, because the supposedly subliminal images that the film flashes occur so slowly (to them) that it seems incredible anyone could ever have considered them to lie at the threshold of consciousness. Steven Johnson (2006), in Everything Bad is Good for You, notes a phenomenon similar to faster image processing when he analyzes the intertwining plot lines of popular films and television shows to demonstrate that narrative development in these popular genres has become much more complicated in the last four decades, with shorter sequences and many more plot lines.
These developments hint at a dynamic interplay between the kinds of environmental stimuli created in information-intensive environments and the adaptive potential of cognitive faculties in concert with them. Moreover, the changes in the environment and cognition follow similar trajectories, toward faster paces, increased complications, and accelerating interplays between selective attention, the unconscious, and the technological infrastructure.

It may be helpful at this point to recapitulate how this dynamic works. The “new unconscious,” which Timothy Wilson (2002) calls the “adaptive unconscious” (a phrase that seems to me more appropriate), creates the background that helps guide and direct selective attention. Because the adaptive unconscious interacts flexibly and dynamically with the environment (i.e., through the technological unconscious), there is a mediated relationship between attention and the environment much broader and more inclusive than focused attention itself allows. A change in the environment results in a change in the technological unconscious and consequently in the background provided by the adaptive unconscious, and that in turn creates the possibility for a change in the content of attention. The interplay goes deeper than this, however, for the mechanisms of attention themselves mutate in response to environmental conditions. Whenever dramatic and deep changes occur in the environment, attention begins to operate in new ways.

Andy Clark (2008) gestures toward such changes when, within the broader field of environmental change, he focuses on particular kinds of interactions that he calls “epistemic actions,” which are “actions designed to change the input to an agent’s information-processing system. They are ways an agent has of modifying the environment to provide crucial bits of information just when they are needed most” (2008, p. 38). I alter Clark’s formulation slightly so that epistemic actions, as I use the term, are understood to modify both the environment and the cognitive-embodied processes that adapt to make use of those changes. Among the epistemic changes in the last fifty years in developed countries such as the US are dramatic increases in the use and pacing of media, including the Web, television and films; networked and programmable machines that extend into the environment, including PDAs, cell phones, GPS devices and other mobile technologies; and the interconnection, data scraping, and accessibility of databases through a wide variety of increasingly powerful desktop machines as well as ubiquitous technologies such as RFID tags, often coupled autonomously with sensors and actuators. In short, the variety, pervasiveness and intensity of information streams have brought about major changes in built environments in the US and comparably developed societies in the last half century. We would expect, then, that conscious mechanisms of attention and the mechanisms undergirding the adaptive unconscious have changed as well. Catalyzing these changes have been new kinds of embodied experiences (virtual reality and mixed reality, for example), new kinds of cognitive scaffolding (computer keyboards, multi-touch affordances in Windows 7), and new kinds of extended cognitive systems (cell phones, video games, multiplayer role-playing games and persistent virtual environments such as Second Life, etc.).

As I have argued elsewhere (Hayles, 2007), these environmental changes have catalyzed changes in the mechanisms of selective attention. In brief, these changes may be represented as a shift from the deep attention end of the spectrum toward the hyper attention end. Deep attention is characterized by a preference for a single information stream, obliviousness to outside environmental distractions, and a desire to immerse oneself in a single object for extended periods of time, for example a Thackeray novel. Hyper attention is characterized by a preference for multiple information streams, flexibility in rapidly switching between information streams, sensitivity to environmental stimuli, and a low threshold for boredom, typified, for example, by a video game player. I further argued that we are in the midst of a generational shift in cognitive modes; the younger the cohort, the more likely they are to incline toward hyper rather than deep attention. Space precludes a full development of the evidence for this hypothesis here. Suffice it to say that anecdotal evidence from colleagues at many colleges and universities across the US, from small liberal arts colleges to Research 1 universities, indicates that teachers in higher education are already seeing evidence of this shift. “I can’t get my students to read long novels any longer,” one colleague explained, “so I’ve taken to assigning short stories.” Another reported that “My students won’t read whole books, so now I excerpt chapters as assignments.”

If we look for the causes of this shift, media seem to play a major role. Empirical studies such as the “Generation M” report in 2005 by the Kaiser Family Foundation indicate that young people (ages 8 to 18 in their survey) spend, on average, an astonishing six hours per day consuming some form of media, and often multiple forms at once (surfing the Web while listening to an iPod, for example, or IMing their friends). Moreover, media consumption by young people in the home has shifted from the living room to their bedrooms, a move that facilitates consuming multiple forms of media at once. Going along with the shift is a general increase in information intensity, with more and more information available with less and less effort.

Although deep attention seems at first glance preferable to hyper attention (especially to academics), in some situations hyper attention is more adaptive, for example in the case of an air traffic controller. It is far too simplistic to say that hyper attention represents a cognitive deficit or a decline in cognitive ability among young people (see Bauerlein (2009) for an egregious example of this argument). On the contrary, hyper attention can be seen as a positive adaptation that makes young people better suited to live in the information-intensive environments that are becoming ever more pervasive. That being said, I think that deep attention is a precious social achievement that took centuries, even millennia, to cultivate, facilitated by the spread of libraries, better K-12 schools, more access to colleges and universities, and so forth. Indeed, certain complex tasks can be accomplished only with deep attention: working through a mathematical theorem, grasping the movement of a Bach quartet, understanding a difficult poem. It is a heritage we cannot afford to lose. I will not discuss further here why I think the shift toward hyper attention constitutes a crisis in pedagogy for our colleges and universities, or what strategies might be effective in guiding young people toward deep attention while still recognizing the strengths of hyper attention. Instead, I want now to explore the implications of the shift for contemporary technogenesis.

Neurologists have known for some time that an infant, at birth, has more synapses (connections between neurons) than she or he will ever have again in life (see Bear et al., 2006). Through synaptic pruning, which follows the early proliferation of synapses known as synaptogenesis, synapses are winnowed in response to environmental stimuli: those that are used strengthen and the neural clusters with which they are associated spread, while those that are not used wither and die. Far from being cause for alarm, this process can be seen as a marvelous evolutionary adaptation, for it enables every human brain to be re-engineered from birth on to fit its environment. Although greatest during the first two years of life, this process (and neural plasticity in general) continues throughout childhood and into adulthood. The clear implication is that children who grow up in information-intensive environments will literally have brains wired differently than children who grow up in other kinds of cultures and situations. The shift toward hyper attention is an indication of the direction in which contemporary neural plasticity is moving. It is not surprising that it should be particularly noticeable in young people, becoming more pronounced the younger the child, down at least to ages three or four, when the executive function that determines how attention works is still in formation.

As we have seen, evolutionary technogenesis or “originary technicity,” as it is sometimes called, postulates that humans as a species co-evolved with the development, transport and use of tools, requiring evolutionary time scales in which to work. Synaptic pruning, however, is not conveyed through changes in the DNA but rather through epigenetic (i.e., environmental) changes. The relation between epigenetic and genetic changes has been a rich field of research in recent decades, resulting in a much more nuanced (and accurate) picture of biological adaptations than was previously the case, when the central dogma had all adaptations occurring through genetic processes. A pioneer in epigenetic research was James Mark Baldwin (1896), who proposed in the late nineteenth century an important modification to Darwinian natural selection. Baldwin suggested that a feedback loop operates between genetic and epigenetic change, when populations of individuals that have undergone genetic mutation modify their environment so as to favor that adaptation. There are many examples in different species of this kind of effect.

In the contemporary period, when epigenetic changes are moving young people toward hyper attention, a modified Baldwin effect may be noted (modified because the feedback loop here does not run between genetic mutation and environmental modification, as Baldwin suggested, but rather between epigenetic changes and further modification of the environment to favor the spread of these changes). As people are able to grasp images faster and faster, for example, cuts become faster still, pushing the boundaries of perception yet further and making the subliminal threshold a moving target. So too with information-intensive environments: as young people move further into hyper attention, they modify their environments so that these become yet more information-intensive, for example by Web surfing while listening to a lecture or IMing while watching a movie.

Now let us circle back and connect these ideas with the earlier discussion of technicity and technical beings. We have seen that in the view of Simondon and others, technical objects are always on the move, temporarily reaching meta-stability as provisional solutions to conflicting forces. Within such solutions, technical elements may detach themselves from a given technical ensemble and become re-absorbed in a new one, performing a similar or different function there. As Simondon laconically observed, humans cannot mutate in this way. Organs (which he suggested are analogous to technical elements) cannot migrate out of our bodies and become absorbed in new technical ensembles (with the rare exception of organ transplants, a technology that was merely a dream when he wrote his study). Rather than having technical elements migrate, humans mutate epigenetically through changes in the environment, which stimulate further adaptations in the milieu, which cause still further epigenetic changes in human biology, especially neural changes in the brain.

Weaving together the strands of the argument so far, I propose that attention is an essential component of technical change (although under-theorized in Simondon’s account), for it creates from a background of technical ensembles some aspect of their physical characteristics upon which to focus, thus bringing into existence a new materiality that then becomes the context for technological innovation. Attention is not, however, removed or apart from the technological changes it brings about. Rather, it is engaged in a feedback loop with the technological environment within which it operates through unconscious and nonconscious processes that affect not only the background from which attention selects but also the mechanisms of selection themselves. Thus technical beings and living beings are involved in continuous reciprocal causation in which both groups change together in related and indeed synergistic ways.

We have seen that technical beings embody complex temporalities, and I now want to relate these to the complex temporalities embodied in living beings, focusing on the interfaces between humans and networked and programmable machines. Within a computer, the processor clock acts as a drumbeat that measures out the time for the processes within the machine; its rate is the speed of the CPU, measured in hertz (now typically gigahertz). Nevertheless, because of the hierarchies of code, many information-intensive applications run much more slowly, taking longer to load, compile, and store. There are thus within the computer multiple temporalities operating at many different time scales. Moreover, as is the case with technical objects generally, computer code carries the past with it in the form of low-level routines that continue to be carried over from old applications to new updates without anyone ever going back and re-adjusting them (this was, of course, the cause of the Y2K crisis, in which years stored as two digits could not distinguish 2000 from 1900). At the same time, code is also written with a view to changes likely to happen in the next cycle of technological innovation, as a hedge against premature obsolescence, even as new code is written with a view toward making it backward-compatible. In this sense too, the computer instantiates multiple, interacting, and complex temporalities, from nanosecond processes on up to perceptible delays.
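The way code carries the past forward can be made concrete with a deliberately simplified sketch of the Y2K problem. It assumes a legacy-style routine that stores years as two digits; the function names are hypothetical and are not drawn from any actual system. Date arithmetic of this kind worked for decades and failed only when an interval crossed the century boundary. A common remediation, "windowing," reinterprets two-digit years relative to a pivot value, which is itself an assumption chosen here for illustration.

```python
# Hypothetical illustration of the two-digit-year problem behind Y2K.

def elapsed_years_two_digit(start_yy: int, end_yy: int) -> int:
    """Legacy-style routine: years stored as two digits, century implied."""
    return end_yy - start_yy


def expand_year(yy: int, pivot: int = 50) -> int:
    """'Windowing' fix: two-digit years below the pivot are read as 20xx,
    the rest as 19xx. The pivot of 50 is an arbitrary assumption."""
    return 2000 + yy if yy < pivot else 1900 + yy


def elapsed_years_windowed(start_yy: int, end_yy: int) -> int:
    """Same calculation after expanding both years to four digits."""
    return expand_year(end_yy) - expand_year(start_yy)


if __name__ == "__main__":
    # An account opened in 1999 ('99') and checked in 2000 ('00').
    print(elapsed_years_two_digit(99, 0))  # -99: the century is lost
    print(elapsed_years_windowed(99, 0))   # 1
```

The windowing fix displays the double temporality described above: it repairs a decision inherited from the past by encoding a new guess about the future (here, that no two-digit year below 50 refers to the 1900s).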

Humans too embody multiple temporalities. The time it takes for a neuron to fire is about 0.3 to 0.5 milliseconds. The time it takes for a sensation to register in the brain ranges from a low estimate of 80 milliseconds (Pockett, 2002) to 500 milliseconds (Libet et al., 1979), that is, roughly 150 to 1,000 times as long as a single neural firing. The time it takes the brain to accomplish a high-level cognitive task such as recognizing a word is 200 to 250 milliseconds (Larson, 2004), or roughly two and a half to three times the lower estimate for registering a sensation. Understanding a narrative can of course take anywhere from several minutes to several hours. These events can be seen as a linear sequence (firing, sensation, recognition, understanding), but they are frequently not that tidy, often unfolding concurrently and at different rates. Relative to the faster processes, consciousness is always belated, reaching insights that emerge through enfolded time scales and at diverse locations within the brain. For Daniel Dennett (1992), this means the consistent and unitary fabric of thought that we think we experience is a confabulation, a smoothing over of the different temporalities that neural processes embody. Hence his “multiple drafts” model, in which different processes result in different conclusions at different times.
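The ratios among these time scales can be checked directly from the figures cited in this paragraph. The short sketch below does only that arithmetic; the variable names are illustrative, and the sensation-to-firing ratio assumes the 0.5 ms upper estimate for a single neural firing.

```python
# Durations cited in the paragraph, in milliseconds.
NEURON_FIRING_MS = 0.5             # upper estimate for a single firing
SENSATION_MS = (80, 500)           # Pockett (2002) low; Libet et al. (1979) high
WORD_RECOGNITION_MS = (200, 250)   # Larson (2004)

# How many firing intervals fit into the time needed to register a sensation.
sensation_vs_firing = [s / NEURON_FIRING_MS for s in SENSATION_MS]

# Word recognition relative to the low (80 ms) estimate for a sensation.
word_vs_sensation = [w / SENSATION_MS[0] for w in WORD_RECOGNITION_MS]

print(sensation_vs_firing)  # [160.0, 1000.0]
print(word_vs_sensation)    # [2.5, 3.125]
```

Taking the 0.3 ms lower estimate for a firing instead would stretch the first range to roughly 267 to 1,667, which only strengthens the point that these processes unfold on widely separated time scales.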

Now let us suppose that the complex temporalities inherent in human cognitive processing are put into continuous reciprocal causation with machines that also embody complex temporalities. What is the result? Just as some neural processes happen much faster than perception and much, much faster than conscious experience, so processes within the machine happen on time scales much faster even than neural processes and far beyond the threshold of human perception. Other processes built on top of these, however, may not be so fast, just as processes that build on the firing of neurons within the human body may be much slower than the firings themselves. The point at which computer processes become perceptible is certainly not a single value; subliminal perception and the adaptive unconscious play roles in our interactions with the computer, along with conscious experience. What we do know is that our experiences with the diverse temporalities of the computer are pushing us toward faster response times and, as a side effect, increased impatience with longer wait times, during which we are increasingly likely to switch to other computer processes such as surfing, checking email, playing a game, etc. To a greater or lesser extent, we are all moving toward the hyper attention end of the spectrum, some faster than others. Nicholas Carr (2010) in The Shallows: What the Internet is Doing to Our Brains documents many empirical studies demonstrating the cognitive effects of these changes, leading him to argue that we must take positive steps to preserve the cultural heritage of (what I have called) deep attention.

Going along with the feedback loops between the individual user and networked and programmable machines are cycles of technical innovation. The demand for increasingly information-intensive environments (along with other market forces such as competition between different providers) is driving technological innovation faster and faster, which can be understood in Simondon’s terms as creating a background of unrealized potential solutions (because of a low degree of technicity, that is, unresolved conflicts between different technical forces and requirements). Beta versions are now often final versions. Rather than debugging programs completely, providers rush them to market and rely on patches and later versions to fix problems. Similarly, the detailed documentation meticulously provided for programs in the 1970s and 1980s is a thing of the past; present users rely on help lines and lists of known problems and fixes. The unresolved background created by these practices may be seen as the technical equivalent of hyper attention, which is both produced by and helps to produce the cycles of technical innovation that result in faster and faster changes, all moving in the direction of increasing the information density of the environment.

This, then, is the context within which Steve Tomasula’s electronic multimodal novel TOC was created. TOC is, in a term I have used elsewhere, a technotext (Hayles, 2002): it embodies in its material instantiation the complex temporalities that also constitute the major themes of its narrations. Heterogeneous in form, bearing the marks of ruptures created when some collaborators left the scene and others arrived, TOC explores the relation between the creation and development of networked and programmable machines and human bodies, with both living and technical beings instantiating and embodying complex temporalities that refuse to be smoothly integrated into the rational and unitary scheme of a clock tick-tocking.

Modeling TOC

As a multimodal electronic novel, TOC offers a variety of interfaces, each of which has its own mode of pacing. The video segments, with voiceover and animated graphics, do not permit interaction by the user (other than to close them) and so proceed at a pre-set pace. The textual fragments, such as those that appear in the bell jar graphic, are controlled by the user scrolling down and so have a variable, user-controlled pace. Access to the bell jar fragments is through a player piano interface, which combines user-controlled and pre-set paces: the scrolling proceeds at a constant rate, but the user chooses when to center the cross-hair over a “hole” and click, activating the link that brings into view bell jar text fragments, brief videos, or cosmic datelines. Through its interfaces, TOC offers a variety of temporal regimes, a spectrum of possibilities enacted in different ways in its content.

An early section, a 33-minute video centering on a Vogue model, encapsulates competing and cooperating temporalities within a narrative frame. The narration opens and closes with the same sentence, creating a circular structure for the linear story within: “Upon a time, a distance that marked the reader’s comfortable distance from it, a calamity befell the good people of X.” The calamity is nothing more (or less) than their sharing the same present for a moment before falling into different temporal modes. Their fate is personified in the unnamed model, ripped from her daily routine when her husband engages “in a revelry that ended in horrible accident.” A car crash lands him in immediate surgery, which leaves him in a coma, kept alive by a respirator breathing air into his body and a pump circulating his blood, thus transforming him into an “organic machine.” Instead of hectic photo shoots and an adrenaline-charged lifestyle, the model now spends uncounted hours at his bedside, a mode of life that makes her realize the arbitrariness of time, which “existed only in its versions.” She now sees the divisions into hours, minutes, and seconds as an artificial grid imposed on a holistic reality, which the graphics visually liken to the spatial grid Renaissance artists used to create perspectival paintings.

Figure 1. Screen shot from Vogue model video.

In this image, the basic homology is between clock time and spatial perspective, a spatialization of temporality that converts it into something that can be measured and quantified into identical, divisible and reproducible units. The screen shot illustrates the video’s design aesthetic. The painter’s model, a synecdoche for the protagonist, is subjected to a spatial regime analogous to the temporal regime the Vogue model followed in her hectic days. The large concentric rings on the left side are reminiscent of a clock mechanism, visually reinforcing the narrative’s juxtaposition of the 3,600 seconds in an hour and the 360 degrees in a circle, another spatial-temporal conjunction. These circular forms, repeated by smaller forms on the right, are cut by the strong horizontal line of the painter and his model, along with the text underneath that repeats a phrase from the voiceover, “tomorrow was another day,” a banal remark that the model takes to represent the spatialization of time in general. The circular repetitions combined with a horizontal through line visually reproduce the linguistic form of the narration, which proceeds as a linear sequence encapsulated within the opening and closing repetitions, with the sentence also appearing in the middle of the narrative in various syntactic permutations.

When the doctors inform the model “she could decide whether or not to shut her husband [i.e., his life support] off,” she begins contemplating a “defining moment, fixed in consciousness,” that will give her life a signifying trajectory and a final meaning. She (and the animated graphics) visualize her looming decision first as a photograph, in which she sees her husband’s face “like a frozen moment” unchanging in its enormity akin to a shot of the Grand Canyon, and then as two films in different temporal modes: “his coiled tight on the shelf, while hers was still running through a projector.” Her decision is complicated when the narration, in a clinamen that veers into a major swerve, announces “The pregnancy wasn’t wanted.”

We had earlier learned that, in despair over her husband’s unchanging condition, she had begun an affair with a “man of scientific bent.” Sitting by her husband’s bedside, she wonders what will happen if she cannot work and pay “her husband’s cosmic bills” when the pregnancy changes her shape and ends her modeling career, already jeopardized by her age compared to younger and younger models. As she considers aborting, the graphics and narration construct another homology: pull the plug on her husband, and/or cut the cord on the “growth” within. While her husband could go on indefinitely, a condition that wrenched her from measured time, the fetus grows according to a biological timeline with predictable phases and a clear endpoint. The comparison causes the metaphors to shift: now she sees her predicament not so much as the arrival of a “defining moment” as a “series of other moments” that “suggested narrative.” Unlike a single moment, narrative (especially in her case) will be defined by the ending, with preceding events taking shape in relation to the climax: “the end would bestow on the whole duration and meaning.”

The full scope of her predicament becomes apparent when the narration, seemingly off-handedly, reveals that her lover is her twin brother. In a strange leap of logic, the model has decided that “by sleeping with someone who nominally had the same chromosomes,” she really is only masturbating and not committing adultery. Admitting that she is not on an Olympian height but merely “mortal,” a phrase that in context carries the connotation of desire, she reasons that if time existed only in its versions, “why should she let time come between her brother and her mortality.” Her pregnancy is, of course, a material refutation of her fantasy. Now, faced with the dual decisions of pulling the plug and/or cutting the cord, she sees time as an inexorable progression that goes in only one direction. This inexorability is contested in the narrative by the perverse wrenching of logical syllogisms from the order they should ideally follow, as if in reaction against temporal inevitability in any of its guises. The distortion appears repeatedly, as if the model is determined not to agree that A should be followed by B. For example, when she asks her lover/brother why time goes in only one direction, he says (tautologically) that “disorder increases in time because we measure time in the direction that disorder increases.” In response, she thinks “that makes it possible for a sister to have intercourse with her brother,” a non-sequitur that subjects the logic of the tautology to an unpredictable and illogical swerve.

As much as the model tries to pry time from sequentiality, however, it keeps inexorably returning. To illustrate his point about the Second Law of Thermodynamics giving time its arrow, the lover/brother re-installs temporal ordering by pointing out that a broken egg never spontaneously reassembles itself. While the graphics show an egg shattering (an image recalling the fertilization of the egg that has resulted in the “growth” within), he elaborates by saying it is a matter of probability: while there is only one arrangement in which the egg is whole, there are infinite numbers of arrangements in which it shatters into fragments. Moreover, he points out that temporality in a scientific sense manifests itself in three complementary versions: the thermodynamic version, which gives time its arrow; the psychological version, which reveals itself in our ability to remember the past but not the future; and the cosmological version, in which the universe is expanding, creating the temporal regime in which humans exist.

Faced with this triple whammy, the model stops trying to resist the on-goingness of time and returns to her earlier idea of time as the sequentiality of a story, insisting “a person had to have the whole of narration” to understand its meaning. With the ending, she thinks, the story’s significance will become clear, although she recognizes that this hope involves “a sticky trick that depended on a shift of a tense,” that is, from present, when everything is murky, to past, when the story ends and its shape is fully apparent. The graphics at this point change from the iconic and symbolic images that defined the earlier aesthetic to the indexical correlation of pages falling down, as if a book were being riffled through from beginning to end. However, the images gainsay the model’s hope for a final definitive shape, for as the pages fall, we see that they are ripped from heterogeneous contexts: manuscript pages, typed sheets, a printed bibliography with lines crossed out in pen, an index, a child’s note that begins “Dear Mom,” pages of stenographic shapes corresponding to typed phrases. As if in response to the images, the model feels that “a chasm of incompletion opened beneath her.” She seems to realize that she may never achieve “a sense of the whole that was much easier to fake in art than in life.”

With that, the narrative refuses to “fake” it, opting to leave the model on the night before she must make a decision one way or another, closing its circle but leaving open all the important questions as it repeats the opening sentence: “Upon a time, a distance that marked the reader’s comfortable distance from it, a calamity befell the good people of X.” We read the same sentence, but in another sense we read an entirely different one, for the temporal unfolding of the narrative has taught us to parse it in a different way. “Upon a time” differs significantly from the traditional phrase “Once upon a time,” gesturing toward the repetitions of the sentence that help to structure the narrative. The juxtaposition of time and distance (“upon a time, a distance”) recalls the spatialization of time that the model understood as the necessary prerequisite to the ordering of time as a predictable sequence. The “comfortable distance” that the fairy-tale formulation supposedly creates between reader and narrative is no longer so comfortable, for the chasm that opened beneath the model yawns beneath us as we begin to suspect that the work’s “final shape” is a tease, a gesture made repeatedly in TOC’s different sections but postponed indefinitely, leaving us caught between different temporal regimes.

The calamity “that befell the good people of X,” we realize retrospectively after we have read further, spreads across the entire work, tying together the construction of time with the invention of the “Difference Engine,” also significantly called the “Influencing Machine.” The themes introduced in the video sequence continue to reverberate through subsequent sections: the lone visionary who struggles to capture the protean shapes of time in narrative; the contrast between time as clock sequence and time as a tsunami that crashes through the boundaries of ordered sequence; the transformations time undergoes when bodies become “organic machines”; the mechanization of time, a technological development that conflates the rhythms of living bodies with technical beings; the deep interrelation between a culture’s construction of time and its socius; and the epistemic breaks that fracture societies when they are ripped from one temporal regime and plunged into another.

Capturing Time

Central to attempts to capture time is spatialization. When time is measured, this is frequently done through spatialized practices that allow temporal progress to be visualized: hands moving around an analogue clock face, sand running through an hourglass, a sundial shadow following the sun across the sky. In the “Logos” section of TOC, the spatialization of time appears in whimsical as well as mythic narratives. The user enters this section by using the cursor to “move” a pebble into a box marked “Logos” (the other choice is “Chronos,” which leads to the video discussed above), whereupon the image of a player piano roll begins moving, accompanied by player-piano music.

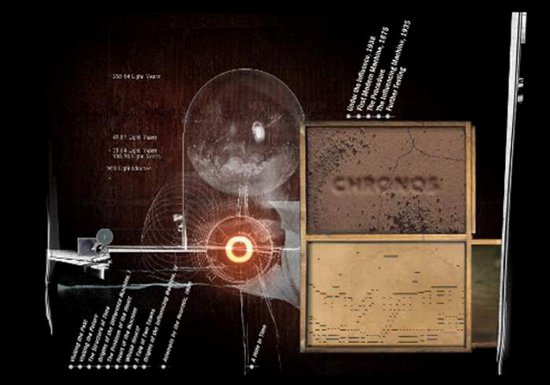

Figure 2. Screen shot from “Chronos/Logos” section showing player piano interface.

Using a cross-hair, the user can click on one of the slots: blue for narrative, red for short videos, green for distances to the suns of other planetary systems. The order in which a user clicks on the blue slots determines which narrative fragments can be opened; different sequences lead to different chunks of text being made available.

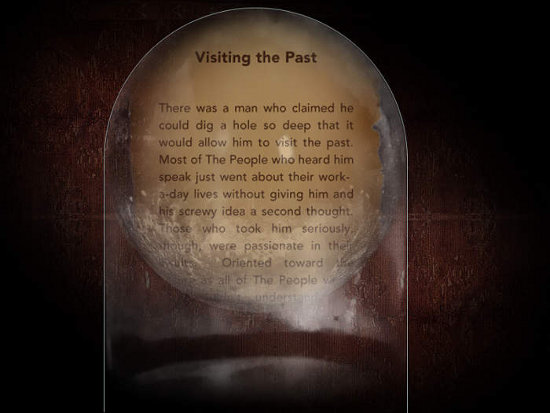

The narratives are imaged as text scrolls on old paper encapsulated within a bell-jar frame, as if to emphasize their existence as archival remnants. The scrolls pick up on themes already introduced in the “Chronos” section, particularly conflicting views of time and their relation to human-machine hybrids. The difficulty of capturing time as a thing in itself is a pervasive theme, as is the spatialization of time. For example, capturing the past is metaphorically rendered as a man digging a hole so deep that his responses to questions from the surface come more and more slowly, thus opening a gap between the present of his interlocutors and his answers, as if he were fading into the past. Finally he digs so deep that no one can hear him at all, an analogy to a past that slips into oblivion.

Figure 3. Screen shot of bell jar graphic with “Visiting the Past” narrative.

The metaphor for the future is a woman who climbs a ladder so tall that the details of the surface appear increasingly small, as if seen from a future perspective where only large-scale trends can be projected. As she climbs higher, family members slip into invisibility, then her village, then the entire area. In both cases, time is rendered as spatial movement up or down. The present, it seems, exists only at ground level.

The distinction between measured time and time as temporal process can be envisioned as the difference between exterior spatialization and interior experience: hands move on a clock, but (as Bergson noted) heartbeat, respiration, and digestion are subjective experiences that render time as Duration and, through cognitive processes, as memory. The movement from measured time to processual temporality is explored through a series of fragments linked to the Difference Engine, also called the Influencing Machine, as we have seen. The former, of course, alludes to Charles Babbage’s device, often seen as the precursor to the modern computer (more properly, the direct precursor is his Analytical Engine). In “Origins of the Difference Engine, 1,” for example, the narrative tells of “a woman who claimed she had invented a device that could store time.” Her device is nothing other than a bowl of water. When she attempts to demonstrate it to the townspeople, who have eagerly gathered because they can all think of compelling reasons to store time, they laugh at her simplicity. To prove her claim, she punches a hole in the bowl, whereupon the water runs out. The running water is a temporal process, of course, but the bowl can be seen as storing this process only so long as the process itself does not take place. The paradox points to a deeper realization: time as an abstraction can be manipulated, measured, and quantified, as in a mathematical equation; time as a process is more protean, associated with Bergson’s Duration rather than time in its spatialized aspects.

In a series of narrative fragments the bowl of water continues to appear, associated with a lineage of visionaries who struggle to connect time as measurement with time as process. In “Heart of the Machine,” a woman who first tried to stop time as a way to preserve her youth imperceptibly becomes an old hermit in the process. As she ages, she continues to grapple with “the paradox of only being able to save time when one was out of time.” She glances at the mattress and springs covering her window, an early attempt to insulate the bowl of water from outside influences, and then thinks of the water running as itself a spring. Thereupon “she realized that the best humans would ever be able to do, that the most influential machine they could ever make would actually be a difference engine - a machine, or gadget, or engine of some sort that could display these differences and yet on its face make the vast differences between spring and springs - and indeed all puns - as seeming real as any good illusion.” The difference engine, that is, is a “gadget” that can operate like the Freudian unconscious, combining disparate ideas through puns and metonymic juxtapositions to create the illusion of a consistent, unitary reality. The computer as a logic machine with its origins in Babbage’s Difference Engine has here been interpreted with poetic license as a machine that can somehow combine differences (pre-eminently the difference between measured time and processual temporality) into a Logos capable of influencing entire human societies.

What could possibly power such an exteriorized dream machine? The woman has a final intuition when “at that very instant, she felt a lurch in the lub-dub of her heart, confirming for her the one course that could power such a device, that could make time’s measurements, its waste and thrift not only possible but seemingly natural … .” That power source is, of course, the human heart. Subsequent narratives reveal that her heart has indeed been encapsulated within the Influencing Machine, the time-keeping (better, the time-constructing) device that subsequently would rule society. The dream-logic instantiated here conflates the pervasiveness and ubiquity of contemporary networked and programmable machines that actually do influence societies around the globe with a steampunk machine that has at its center a human heart, an image that combines the spatialization of time with the Duration Bergson posed as its inexplicable contrary. Such conflation defies ordinary logic in much the same way as the Vogue model’s non-sequiturs defied the constructions of time in scientific contexts. Concealed within its illogic, however, is a powerful insight: humans construct time through measuring devices, but these measuring devices also construct humans through their regulation of temporal processes. The resulting human-technical hybridization in effect conflates spatialized time with temporal duration.

Figure 4. Screen shot of the Influencing Machine just before it breaks (at 12:00:00).

The feedback loop reaches its apotheosis in a video segment that begins, “When it came, the end was catastrophic,” accessed by clicking on a cloudy section in the piano roll. The video relates an era when the triumph of the Influencing Machine seems absolute as it produces measured and spatialized time; “the people had become so dependent on time that when they aged, it permeated their cells.” The animated graphics extend the theme, showing biological parts - bones, egg-like shapes, moving microscopic entities that seem like sperm - moving within the narrative frame of time measured. Spatialized time also permeated all the machines, so that everything became a function of the “prime Difference Engine,” which “though it regulated all reality could not regulate itself.” As with the historical Difference Engine Babbage created, the Influencing Machine had no way to self-correct, so that “minute errors accumulated” until one day, “like a house of cards, time collapsed.” Like the bowl of water associated with the female mystic’s humiliation but now magnified to a vast scale, the transformation from time measured to processual time has catastrophic effects. “Archeologists later estimated that entire villages were swept away, because they had been established at the base of dikes whose builders had never once considered that time could break.”

As the city burns and Ephema escapes with her followers, her “womb-shaped tears,” shed as she weeps for her destroyed city, “swelled with pregnancy,” presumably with the twins Chronos and Logos, who, an earlier video had explained, were born in different time zones, rendering ambiguous which was born first and so had the right of primogeniture. The father, the people speculate, must be none other than the Difference Engine itself, an inheritance hinting that the struggle between measured time and processual time has not ended. Indeed, when the queen establishes a new society, her glass fingernails spread throughout the populace, so that soon all the people have hour-glass fingernails. During the day, “the sand within each fingernail ran toward their fingertips.” To balance this movement of time, the people must sleep with their arms in slings so that the sand runs back toward their palms. Nevertheless, “It was only a matter of time until a person was caught with too much sand in the wrong places,” whereupon he dies. This conflation of time as experience and time as measured, in another version of human-technical hybridity, is given the imprimatur of reality, for such a death is recorded as resulting from “Natural Causes,” a conclusion reinforced by a graphic of the phrase appearing in copperplate handwriting.

In TOC, time is always on the move, so that no conclusion or temporal regime can ever be definitive. In a text scroll entitled “The Structure of Time,” the narrative locates itself at “the point in history when total victory seemed to have been won by the Influencing Machine and its devotees, when all men, women, and thought itself seemed to wear uniforms.” An unnamed female protagonist (perhaps the mystic whose heart powers the Influencing Machine) envisions the Influencing Machine as “an immense tower of cards,” a phrase that echoes the collapse of time “like a house of cards.” The Influencing Machine is usually interpreted as a vertical structure in which each layer builds upon and extends the layer below in an archeology of progress - sundials giving way to mechanical clocks, digital clocks above them, atomic clocks higher still, then molecules “arranged like microscopic engines” (nanotechnology?), and finally, above everything “a single atom” moving far too fast for human perception. The protagonist, however, in a moment of visionary insight inverts the hierarchy, so that each layer becomes “subservient to the layers below, and could only remain in place by the grace of inertia.” Now it is not the increasing acceleration and sophistication that counts but the stability of the foundation. All the clocks rest on the earth, and below that lies “the cosmos of space itself,” “the one layer that was at rest, the one layer against which all other change was measured and which was, therefore, beyond the influence of any machine no matter what kind of heart it possessed, that is, the stratum that made all time possible - the cold, infinite vastness of space itself.” Echoing the cosmological regime of time that the Vogue model’s brother says makes the other temporal regimes possible, the mystic’s vision reinstates as an open possibility a radically different temporal regime. 
Temporal complexity works in TOC like a Moebius strip, with one temporal regime transforming into another just as the outer limit of the boundary being traced seems to reach its apotheosis.

This dynamic implies that the Influencing Machine will also reinstate itself just when the temporality of the cosmos seems to have triumphed. So the video beginning with the phrase, “When it came, the end was catastrophic” concludes with the emergence of The Island, which I like to think of as The Island of Time. Divided into three social (and temporal) zones, The Island explores the relation between temporal regimes and the socius.

Figure 5. Screen shot of “The Island” section.

The left-hand (or Western) side believes in an ideology of the Past, privileging practices and rituals that regard the Present and Future either as non-existent or unimportant. The middle segment does the same with the Present, and the right or Easternmost part of The Island worships the Future, with its practices and rituals being mirror inversions of the Westernmost portion. Each society regards its own members as the true humans (“the Toc, a name that meant The People”), while the others are relegated to the TIC, non-people excluded from the realm of the human. Echoing in a satiric vein the implications of the other sections, The Island fragments illuminate the work’s title, “TOC.” “TOC” is a provocation, situated after a silent “Tic,” like a present that succeeds a past; it may also be seen as articulated before the subsequent “Tic,” like a present about to be superseded by the future. In either case, “TOC” is not a static entity but a provisional stability, a momentary pause before (or after) the other shoe drops. Whereas Simondon’s theory of technics has much more to say about technical than about living beings, TOC has more to reveal about human desire, metaphors, and socius than it does about technical objects. Often treating technical objects as metaphors, TOC needs a theory of technics as a necessary supplement, even as it also instantiates insights that contribute to our understanding of the co-constitution of living and technical beings.