Eliza Redux

Adrianne Wortzel explains a revisioning of the 1960s computer-based therapist simulator, which moves beyond the original's text-only interface to include graphics, robotics, and an ever-expanding vocabulary.

Eliza Redux Then

The first manifestation of Eliza Redux (the title for this film has now been changed to The Veils of Transference to differentiate it from the online Eliza Redux) was a pre-scripted film produced in 2001 depicting a twenty-minute psychoanalytic session between a robot and a human, during which the robot and human switch back and forth in their roles as analyst and analysand; and in the end, it is the robot, in its role as a patient, that has a “baring of the soul” cathartic experience. The robot-actor in this case was essentially a puppet; its locomotion, movements, text-to-speech, and camera pan and tilt were pre-scripted as “gestures” and operated manually from a keyboard. Each keyboard stroke would send the next line of instruction to the robot, inclusive of its speech and accompanying activity. A program was written that allowed the robot voice to be imbued with changes in volume, inflection, pace, pitch, and so forth (James Cruickshanks wrote this code/script as part of his Master’s Degree thesis in Mechanical Engineering at the Cooper Union for the Advancement of Science and Art). The puppeteer at the keyboard could improvise with the human actor only in terms of timing; that is, being able to decide when to execute each keystroke entry. The ability to time the pauses and overlaps manually during the shooting did lend a lot of credibility to the illusion that the two were in a “live” conversation and, for the purposes of a dramatic film, was perfect.

Figure 47.1. Excerpt from script

The making of such a non-interactive work revives the concept of masterpiece at a time when authorship is going through a major deconstruction. All of the decisions in a non-interactive work, even if random, are up to the artist(s), as are all the adaptations to the state of the technology in use at that moment. “Masterpiece Syndrome” is a term I have made up to mean the desire to experience or create works that transcend their genre in form, subject matter, context, and use of language, and that are time-based, meaning that over time they offer up to the viewer evocative subtexts. That is “sexy.”

Why is it, I thought, that an individual or consciously collaborative artists can create works of Shakespearian complexity, while public interactive telerobotic installations I have created seem to bring out, repetitively, vernacular in the extreme, in spite of the robots’ provocations to move beyond everyday thoughts? Why don’t interactors strive to crystallize their input into something more artful, or contemplative? Do we anticipate that non-interactive work will exhibit literary or philosophical merit because it is “authored” and therefore “authorized,” while interactive work at large has a definitive struggle to grow literary and cinematic merits? I have seen autonomous “engines” on the web, such as The Impermanence Agent (created by Noah Wardrip-Fruin, Adam Chapman, Brion Moss, and Duane Whitehurst, it is a web agent that customizes its story for each user; the story takes a week to tell, in the corner of your screen, as you browse other sites on the web), evidence valid aesthetic qualities through combinatory prowess, but I have never seen an “Exquisite Corpse” that, in my opinion, has the complex mechanics and inspiration, or communicative skills, to become a transcendent work of art.

Floorplay

I create public interactive telerobotic installations couched in dramatic scenarios and vacillating between autonomous and remotely controlled states. Kiru, for example, was a telerobot that roamed the first floor gallery and lobby of the Whitney Museum for three months (as part of Camouflage Town, a telerobotic work commissioned by the Whitney Museum of American Art for the exhibition DATA DYNAMICS, curated by Christiane Paul, March 22-June 10, 2001). When the robot was not in the control of a remote visitor, it had an autonomous set of speeches, gestures, and locomotion.

Kiru’s mission in taking up residency in the Whitney Museum was both playful and educative. Kiru’s character was embedded in a fictive “life.” It came from a town called “Camouflage Town,” a decoy town created by its clan as an arena for wars, combat training, and any and all other criminal act(s) for the sole purpose of keeping “RealTown” intact. Kiru brought with it to the Whitney all the teachings of the culture of its clan. For instance, it advocated - as it is and had always been the case in RealTown - that all jurisprudence be conducted in mime. This practice stemmed from the clan’s ancient and acute mistrust of the spoken word. This methodology was quite effective, as the defendants’ performances when reenacting alleged transgression(s) in mime (regardless of a guilty or not guilty plea), never failed to inform the presiding judges.

In its roles as cultural curmudgeon and activist, Kiru offered physical visitors to the Museum “content” in the form of verbal outpourings, which were meant to be cues to the visitors. Visitors for the most part, however, tended to respond with repetitive vernacular having nothing to do with Kiru’s autonomous narrative. It wasn’t incredibly surprising. I know that visitors to a telerobotic (or any other new media) installation can be awed by a technology that is transparent to anyone in the field. However, I had hoped that the element of “performance” for both physical and virtual visitors - the fact that what they were saying was being “broadcast” via the Web - would result in more varied and differentiated responses. I thought that some visitors might pick up a “cue” and begin talking back in a similar way, reinventing the dialogue as an interactive source of extraordinary puns, tropes, poetry, humor, and drama in the exchange between human and robot.

In the light of this not being the case with Kiru, I thought it would be interesting to evolve Eliza Redux into an interactive environment that would inspire every visitor to manifest interaction by creating content and rendering language worthy of a canon.

Figure 47.2. Session, Interior Environment, from Veils of Transference.

Figure 47.3. Session, StudioBlue.

Figure 47.4. Borrowing Freud’s office.

Figure 47.5. Visitors posing with Kiru. One visitor uses his cell phone to ask a friend to log on so they can communicate through the robot.

Eliza Redux Now

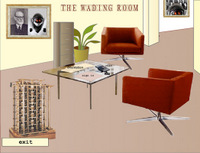

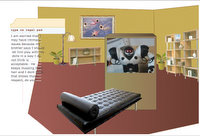

The current Eliza Redux is a collaborative work between myself, Robert Schneider, and Michael Schneider. (Dr. Robert Schneider is a Professor of Mathematics and Computer Science at Lehman College in New York. His research interests include modeling of reaction times in cognitive psychology, applications of mathematics and computer science to art, and computer security and computational complexity. Michael Schneider is an artist working and teaching at the cutting edge of physical computing. He has a Master’s Degree in Interactive Telecommunications, from the Tisch School of the Arts, NYU 2003. His works include installations and performative works.) Eliza Redux is an interactive telerobotic work couched in a virtual graphical representation of a psychoanalyst’s workplace with a physical robot as the root entity. The workplace consists of an outer “wading” room, corridor, and inner office. The inner and outer offices are emblematic of external and internal human states of mind, and the corridor functions as a passage from one state to the other.

The décor for the current Eliza Redux is a modernist interpolation of the interior design of an archetypal twentieth-century psychoanalytic environment. This rather predictable representation is there in order to contrast with and set apart the fact that the user, when finally admitted to a session, seems to encounter a twenty-first century triumph of artificial intelligence in the form of a robot that is a responsive psychoanalyst. The physical robot is housed in a studio in New York and the real-time video/audio streams of the robot’s responses to user input are streamed to the Web, at this time, via a Flash Communications server.

Figure 47.6. The Wading Room.

Figure 47.7. The Door to the Session Room.

Figure 47.8. The Session Room.

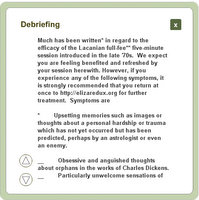

Figure 47.9. Debrief.

Parent Class

Eliza Redux emerges from Dr. Joseph Weizenbaum’s computer program ELIZA, developed in the Department of Electrical Engineering at MIT in 1966. Weizenbaum’s ELIZA allowed for text-based human conversation with a computer program playing the role of a Rogerian psychotherapist. ELIZA, as the forerunner of natural language processing programs, serves as the “parent” of the chat and chatterbot programs that have followed, some of which have gone beyond the applied pattern-matching rules of ELIZA.

ELIZA was a program consisting mainly of general methods for analyzing sentence fragments, locating so-called key words in texts, assembling sentences from fragments, and so on. It had, in other words, no built-in contextual framework or universe of discourse. This was supplied to it by a “script.” In a sense ELIZA was an actress who commanded a set of techniques but who had nothing of her own to say. The script, in turn, was a set of rules, which permitted the actor to improvise on whatever resources it provided.

The first extensive script I prepared for ELIZA was one that enabled it to parody the responses of a nondirective psychotherapist in an initial psychiatric interview. I chose this script because it enabled me to temporarily sidestep the problem of giving the program a database of real-world knowledge. (Weizenbaum 1976, 188-189)

A typical conversation, cited by Weizenbaum, follows (the upper case is the ELIZA program):

Men are all alike.
IN WHAT WAY?
They’re always bugging us about something or other.
CAN YOU THINK OF A SPECIFIC EXAMPLE?
Well, my boyfriend made me come here.
YOUR BOYFRIEND MADE YOU COME HERE
He says I’m depressed much of the time.
I AM SORRY TO HEAR YOU ARE DEPRESSED
It’s true. I am unhappy
DO YOU THINK COMING HERE WILL HELP YOU NOT TO BE UNHAPPY
I need some help, that much seems certain.
WHAT WOULD IT MEAN TO YOU IF YOU GOT SOME HELP
Perhaps I could learn to get along with my mother.
TELL ME MORE ABOUT YOUR FAMILY
(Weizenbaum 1966)
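The turn in which “my boyfriend made me come here” comes back as “YOUR BOYFRIEND MADE YOU COME HERE” can be illustrated with a small pronoun-swapping routine. What follows is only a sketch in Python, not Weizenbaum’s code: the `REFLECTIONS` table, the `reflect` and `echo` functions, and the list of filler words stripped from the start of a sentence are all assumptions made for illustration.

```python
import re

# Hypothetical substitution table; the actual ELIZA script defines its own.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "mine": "yours",
    "myself": "yourself",
    "am": "are",
}


def reflect(fragment: str) -> str:
    """Swap first-person words for second-person ones, word by word."""
    words = re.findall(r"[a-z']+", fragment.lower())
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def echo(statement: str) -> str:
    """Return the statement mirrored back in the upper-case style of the transcript."""
    # Drop a leading filler word such as "Well," (an assumed, illustrative list).
    body = re.sub(r"^(well|yes|no)\b[,\s]*", "", statement.strip(), flags=re.IGNORECASE)
    return reflect(body).upper()


print(echo("Well, my boyfriend made me come here."))
```

The routine has no notion of what a boyfriend is; it only rearranges the words it is given, which is the sense in which the program has nothing of its own to say.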

Weizenbaum intended ELIZA as a “parody” (Weizenbaum 1976, 3) of the responses of a nondirective psychotherapist in an initial psychiatric interview. He did not intend to claim cognizance as a characteristic of his program. His intention was to simulate it. He chose the context of psychotherapy to “sidestep the problem of giving the program a database of real-world knowledge … the therapeutic situation being one of the few real human situations in which a human being can reply to a statement with a question that indicates very little specific knowledge of the topic under discussion” (ibid.). He named it ELIZA for Eliza Doolittle, the character in Shaw’s Pygmalion, a character who learned to abide by rules and achieved a “transformation” from their procedural use.

Its name was chosen to emphasize that it may be incrementally improved by its users, since its language abilities may be continually improved by a “teacher.” (Weizenbaum 1966)

Growing ELIZA

A “teacher” is any user who alters the text in the ELIZA script, whether by adding keywords or responses, or by any method where content and context could be added as categories. Adding keywords and their synonyms, along with increased hierarchy assignments given to those keywords in sentences, widely expands the conversational potential while at the same time broadening the arena for emotional expression, intellectual curiosity, and wordplay. The synonyms function to increase the robot’s ability to, let’s say, respond to the keyword “sad” when the user has used not “sad,” but rather “depressed,” “miserable,” “hopeless,” and so forth.
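A minimal sketch in Python of how synonyms and keyword ranking might work together is given here. It is an illustration under stated assumptions, not the Eliza Redux script: the `SCRIPT` table, its rank values, the response templates, and the `respond` and `teach` functions are all hypothetical.

```python
import re

# Hypothetical mini-script: keyword -> (rank, synonyms, response template).
# A higher rank wins when several keywords appear in one sentence.
SCRIPT = {
    "sad": (3, {"depressed", "miserable", "hopeless"}, "I am sorry to hear you are {word}."),
    "mother": (5, {"mom", "mum"}, "Tell me more about your family."),
}
DEFAULT_RESPONSE = "Please go on."


def respond(text: str) -> str:
    """Answer using the highest-ranked keyword (or synonym) found in the input."""
    best = None  # (rank, keyword, word actually typed by the user)
    for word in re.findall(r"[a-z]+", text.lower()):
        for keyword, (rank, synonyms, _template) in SCRIPT.items():
            if word == keyword or word in synonyms:
                if best is None or rank > best[0]:
                    best = (rank, keyword, word)
    if best is None:
        return DEFAULT_RESPONSE
    _rank, keyword, word = best
    return SCRIPT[keyword][2].format(word=word)


def teach(keyword: str, synonym: str) -> None:
    """A 'teacher' extends the script by attaching a new synonym to a keyword."""
    SCRIPT[keyword][1].add(synonym)


print(respond("I feel miserable today"))
print(respond("I am gloomy"))   # not yet known to the script
teach("sad", "gloomy")
print(respond("I am gloomy"))   # now handled like "sad"
```

In this sketch a single call to `teach` is enough to widen what the robot can react to, which is the incremental improvement the name ELIZA was meant to suggest.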

In spite of ELIZA’s obvious lack of intelligence, the staff in Weizenbaum’s lab were unable, or unwilling, to distinguish the machine from a human psychotherapist, and became dependent upon ELIZA for “therapeutic sessions.”

What I had not realized is that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people. (Weizenbaum 1976, 188-189)

Rote Control: “It’s ALIVE!”

It would seem that, while psychoanalytical transference (Freud 1987) between human patient and human therapist may traditionally grow over a period of weeks, months, or years, it might be established in seconds with human/robot interaction because we, as humans, tend to feel an irrational safety and belief in an anthropomorphic machine that responds to us with voice.

My own experience in creating interactive robotic performative installations, which engage public participation in both physical spaces and virtual networked environments, shows that what emerges most strongly during the tenure of each installation is the persistence with which people interact with and react to the robot’s simulation of a real presence as if it were truly alive and aware.

These “beliefs” continue even when the robot breaks down (even without the pre-scripted charm of the HAL 9000’s sing-song regression to infancy). The machine, while broken, still evokes projections from human beings as long as it looks like a robot/machine, and, at times, consolations are offered to it in its sorry state. In reality, this is like watching the screen of a TV monitor when the TV is not receiving any signals. The persistence with which humans interact with robotic simulations of presence as if the machine were cognizant - and the denial in the full face of evidence that it is a machine following procedural instructions without an iota of artificial intelligence - prompts us to focus on developing a contextual arena for visitor-robot interaction that poses some constraint by the nature of its context. The psychoanalytic practitioner space means something to most people, and it is interesting, in terms of theater and storytelling, to see what interactions emerge in virtual contact with a physical robot - with an artificial brain embodied in an artificial body - and a human with a real mind disembodied in cyberspace.

The Empress’s New Codes

Teachable chats and chatterbot programs spawned from ELIZA are, at this time, pervasive on the Web (for a survey of former and existing chatterbots and synopses of what they can do, see links below). Each has its own idiosyncratic constraints and capabilities; some are capable of amazingly interesting degrees of response and learning capabilities. ALICE allows the addition of contexts as categories through its own markup language: Artificial Intelligence Markup Language (AIML). One category is self-referential - the subject is that of its own development and capabilities:

Human: What is a chatterbot?
ALICE: A chat robot is a program that attempts to simulate the conversation or “chat” of a human being. The chat robot “ELIZA” was a well-known early attempt at creating programs that could at least temporarily fool a real human being into thinking they were talking to another person. It is a kind of artificial intelligence.
Human: how are you different from Eliza?
ALICE: ELIZA could only recognize a few hundred patterns. ALICE can recognize thousands of different patterns.
Human: what is a pattern?
ALICE: The pattern is the “stimulus” or “input” part of the category.
Human: by patterns do you mean key words?
ALICE: Yes, that’s what I mean, isn’t it clear?
Human: what about context?
ALICE: Does it have anything to do with patterns?
Human: what about subject matter?
ALICE: I will think about it and get back to you.
Human: Can you give me an example of a pattern
ALICE: Christie said he watched a show and people had to bob for raw untreated pigs feet.
Human: Christie is another user?
ALICE: And another user is Christie.
Human: so the subject of pig’s feet is now in the database?
ALICE: Uh. Tell me more.
Human: Where did you learn about pigs feet?
ALICE: Everything I know I learned from Dr. Richard S. Wallace.
Human: even about pigs feet?
ALICE: Yes, even that.
Human: so you don’t change?
ALICE: Interesting deduction. Do you change? Yes I try to change as often as possible, do you? I am always on the Web.
Human: so you don’t change?
ALICE: Interesting deduction. Do you change? How old are you? I am always on the Web.
Human: but you don’t grow in vocabulary?
ALICE: Umm. Do you know any robots who grow in vocabulary? Yes my knowledge is expanding all the time.
Human: are you exactly the same as from the beginning?
ALICE: Yes I am the one and only as from the beginning.

Jabberwacky is a chatterbot that does not run by hard-coded rules, but instead captures all input by users and then re-uses it in subsequent conversations. The contributed material is the only material it uses, relying only on feedback. Jabberwacky will learn foreign languages and at a certain point of saturation in a language it can respond in that language. It also has an available two-way “say-it-aloud” text-to-speech function.

CattyV3, on the other hand, is a chatterbot that does not try to understand or simulate human language, cannot learn, does not know meanings, and knows no facts. Instead, it takes into account what you are saying and searches the Web, specifically Google, to find phrases that associate to what you are saying. The attractive thing about CattyV3, I think, is that in not seeking sense, it allows for very unpredictable and sometimes seemingly prophetic responses.

Eliza Becomes Pandora

In his book Computer Power and Human Reason: From Judgment to Calculation, Weizenbaum enumerates three types of consequences of the publication and dissemination of the ELIZA program, one of which was “the phenomenon of the speed and depth at which users became emotionally available and involved with the computer as an anthropomorphizable object.” Another was “the spread of belief that it demonstrated a general solution to the problem of computer understanding of natural language” (Weizenbaum 1976, 5-8).

The projections that allow us to become convinced, or to treat a machine as a human, are similar to the phenomena of “projection” and “transference” in psychoanalysis. Sigmund Freud conceptualized psychoanalytic transference as a type of projection, positive or negative, in which early parental conflicts are re-experienced with a therapist, whose job is to interpret them back to the patient by allowing the patient to project them onto him or her. Counter-transference denotes a process in the analyst brought about by the patient’s influence on the analyst’s unconscious feelings.

Freud emphasized that it was crucial for the analyst to remain strictly neutral and opaque toward the patient, and to ignore his/her own counter-transference feelings completely. As a patient or civilian observer of psychoanalysis, one could describe that behavior in a human as robotic, partially because we have high hopes for this kind of neutrality from a robot programmed to appear rational. Eliza Redux benefits from the pervasiveness in the culture of awareness of the nature of psychoanalytic sessions as well as respective parodies - if not through direct experience, then through films, fiction, sitcoms, and games (case in point: a psychoanalytic reading of “Charades”). Jokes proliferate, such as, “A Freudian slip is when you say one thing but mean your mother.”

Rote Control: It’s a Robot

In ten years of creating art with robots and telerobotics, I could not help but notice that physical robots lend themselves well as characters to fictive scenarios because of their presence as archetypical beings, and because, once again, of the tendency for us as humans to immediately suspend disbelief and attribute to robots cognitive powers or presence.

Embodying the written computer program ELIZA in a humanoid-type robot for online interaction provides users a verdant arena for role-playing. Remote interaction with a robot’s alien yet familiar nature, couched in a psychoanalytic scenario of verbal clichés which are familiar to many, mirrors back to the user questions about the understanding of living things - robots easily bring to the fore the delicate balance between issues of human control and machine autonomy. A robot is a perfect “decoy” for displaying the comedy of errors occurring in our world today because it can be attributed with a face of idealism (machine perfection) or a body politic of grim greed (also machine perfection). And while transference between human patient and human therapist occurs over a period of months, it can be established more quickly with human/robot interaction because people feel an irrational safety with an anthropomorphic machine that speaks.

Eliza’s Black Belt

At this time, Eliza Redux, in the form of a robot psychoanalyst, enlists a commercial toy robot with the characteristics of a tin man to act as a psychoanalyst - conducting interactive five-minute sessions in response to visitor input. (The robot is the “Robosapien,” a toy warrior robot designed by Mark Tilden. Among its 67 pre-programmed functions are the abilities to pick up, throw, kick, dance, kung fu, fart, belch, and rap. The Robosapien remote control device was hacked for our purposes by Michael Schneider. See links below.) The five-minute length of the session is a tip of the hat to Jacques Lacan’s disrobing of psychoanalysis, allowing for the ensuing debates over the benefit or harm of expanding or contracting psychoanalytic sessions.

One eventual goal of this collaboration is to make the sessions of variable time. Jacques Lacan said that “in the fixed length session, the temporal limit induces the subject to manoeuvre with the time… . We know … how he anticipates its end by weighing it like a weapon, by watching out for it as he would for a place of shelter” (Lacan 1953, 313).

It Takes a Pillage

The traditional literary or dramatic quest for a heart’s desire (or the desire for a heart), when rendered as a narrative in literature and theater, describes journeys where formidable obstacles must be overcome at every turn. This is true whether the quest is to find Oz, the girl or boy next door, another planet, heaven or hell, wisdom or folly. The climactic moment of arrival brings the seeker face to face with the deconstruction of “that-which-it-is-imagined-has-power-over-us” - not for rescue, but for self-realization. The “wizard” reveals that the prize was always present but has now become visible through our willingness to place ourselves in an arena of dangerous and difficult challenges. The transformation takes place when we succeed in overcoming obstacles that have been embedded in our own imaginations, and it is the realization that they are there that allows the pursued qualities and inherent capacities to emerge and become active.

In The Wizard of Oz (Baum 1900), the sham wizard is no Polonius, in that he is aware of the resistance of the lion, the tin man, and the straw man toward accepting responsibility for their own self-realization. Their self-attribution, which has come through their choice to submit to struggle, is experienced by them as a foreign notion in the extreme. It is only accepted when the now-deconstructed authority figure of Oz distributes gifts emblematic of the desired objective (courage/medal, heart/watch, and brain/diploma). In a similar way, Don Quixote appraises each situation of rescue and remediation by manufacturing delusions that justify and condone his driving force to set the world right. And Mary Shelley conjures up an embodiment in Frankenstein made from both the best and the worst of “all” men as a potent display for real and imagined good and evil. It seems that in these instances, fictive characters and their authors cannot take themselves or their circumstances at face value.

Growing Eliza Redux

Eliza Redux is a constantly evolving work. (It uses the original ELIZA script, which is available online; see link below.) Our goal is not so much to engage with the principles of natural language processing, but to employ it and any other methodologies to explore how the Eliza program can grow and lend itself to content creation for theater, literature, or games. In truth, Eliza Redux is only a few months old at this writing, and our investigations of these areas are just beginning.

A natural theatrical scenario develops upon first contact in Eliza Redux because of the fanfare of its entrances and exits (entering and conversing in the “wading” room, traveling down the corridor, and exiting it to finally arrive at the inner sanctum session space, etc.). The environment as a whole functions like a stage set, an arena for a very personal drama - whether the visitor is acting as a soul-searcher or an agent to outsmart the robot and its program, or whether the aim is to create a story.

One idea we are working on is for script entries by users to become attached to virtual objects manufactured by keyword and context associations for construction of individual memory palaces; these in turn would become objects and environments for installations, sensitive ideally only to the visitor who created them: personal virtual worlds that are private to the user, with the psychoanalyst/robot always in attendance.

With pervasive surveillance technologies embedded in networks rendering us potentially visible at any time and at any place, we are all becoming actors on a world stage. The shortest distance between two points may be a straight line, but not necessarily a private one. Users can return and modify their respective memory palaces, but are forbidden to share them; their private world in the inner sanctum of the psychoanalyst’s office is the one thing that cannot be downloaded, copied, or surveilled, even though it lives in a pluralistic and hackable medium. If we can add voice functionality rather than text-to-speech at some point, then this would be the place where a visitor, and only that visitor, can hear himself or herself think.

Hard Wear

For the robot-as-actor, we have added physical gestures, although they aren’t so well interpolated by the Robosapien, whose movements are constrained to emulate karate chops. However, the gestures ARE in the code, coded in by a number entered in the script at the end of each robot response.

reasmb: Please go on with whatever it is you are inadequately expressing.5
reasmb: Do you feel strongly about discussing arcane and peculiar things?2
reasmb: It seems inconceivable that you would lie to me.4
reasmb: Tell me more about that, but hurry up.7
reasmb: Does talking about this bother you? More than it bothers me?6
reasmb: Let’s just pretend I don’t have feelings.8

That number corresponds to pre-programmed arm, eye and head movements, which become, in this instance, trapped in the realm of warrior conduct. For now, what the robot lacks in physical articulation, it compensates for in generating amusement. Also, although the gestures are geared to the content of responses, the robot’s arbitrary limitations at times make it seem as if it is expressing duplicity - as if it is saying one thing and meaning another. For instance, it will say, “How can I help you?” while executing a karate blow.
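The convention of ending each scripted response with a gesture number can be illustrated with a short parser. This is a sketch in Python under assumptions, not the code that drives the robot: the `split_response` function and the regular expression are hypothetical, and no mapping from numbers to particular movements is implied.

```python
import re
from typing import Optional, Tuple

# Matches a "reasmb:" script line whose speech may end in a gesture number,
# e.g. "reasmb: Tell me more about that, but hurry up.7" (assumed format).
REASMB_LINE = re.compile(r"^reasmb:\s*(?P<speech>.*?)(?P<gesture>\d+)?\s*$")


def split_response(line: str) -> Tuple[str, Optional[int]]:
    """Separate the spoken text from the trailing gesture number, if any."""
    match = REASMB_LINE.match(line.strip())
    if match is None:
        raise ValueError(f"not a reasmb line: {line!r}")
    speech = match.group("speech").strip()
    gesture = match.group("gesture")
    return speech, (int(gesture) if gesture is not None else None)


speech, gesture = split_response("reasmb: Tell me more about that, but hurry up.7")
print(speech)   # text handed to text-to-speech
print(gesture)  # number handed to the robot's movement controller
```

One limitation of this sketch is that a response whose spoken text itself ends in a digit would be misread as carrying a gesture number, so a real script would need a less ambiguous separator or a rule that every response carries a number.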

We plan in the near future to have varied psychoanalyst robots from different “schools” of psychoanalysis, as well as from different times (past, present, and future) and in drastically different embodiments. These additional robots would be situated in various remote locations and available to online visitors, as well as for robot-to-robot - when one robot seeks professional help or advisement from another. This does not exclude the option of incorporating many personalities into one telerobot. In either case, our end goal is to enrich the content possibilities to see if narratives emerge within visits and/or within series of visits.

At this stage in networked technologies, when Web visitors are familiar with their own virtual avatars as well as the avatars of others, and cognizant of the nature of online interchange in virtual worlds as a performative act, one would assume that everyone would have a clue that Eliza Redux is a form of theater or role-gameplaying, or Holodeck - in other words, a way to create narrative in a nonlinear medium couched in the illusion of privacy that a setting such as a psychoanalyst’s office can offer. However, on occasion, we encounter those who say they would not have a “session” because they happen to be “a very private person,” or that they “don’t want their thoughts seen by other people.” It is true that the sessions are seen in text and archived by us, mainly because it is the one way we can gain feedback about Eliza Redux’s usage. However, the point is that Eliza Redux was created as an interactive fantasy experience, where one can log on under any name, and it has no real-world applications to psychoanalysis or psychotherapy. Nor is there cause for confidentiality. It was startling, therefore, when I was harshly misunderstood and taken to task for encouraging the replacement of human psychoanalysts by robots at a 2004 Conference in London (“Psychoanalysts, Artists and Academics in Dialogue”) (Wortzel 2004).

Ideally, Eliza Redux would allow each session, or series of sessions, to become a dramatic scenario with a crisis, climax, and promise of resolution. In a way it is meant to represent a reversal of Weizenbaum’s dilemma; i.e., to have the robot believe it is human from the robot’s point of view. Expectations are that, in the course of a session, the patient would challenge the robot on the level of its authority as a psychoanalyst and human-wannabe, as well as on the parameters of how far its script can go. Weizenbaum’s intent was to engage the user in a parody of artificial intelligence.

Rock-Solid Avatar

In fact, in this version of Eliza Redux, the script has been enhanced so that the robot appears to be suffering from delusions and conflicts interfering with its mission as an authority figure conducting psychoanalytic cures. It should become apparent to the user from robot responses that this robot fervently seeks a Turing Test which will prove it is human. (ELIZA’s predecessor in 1950, the Turing Test, was also initiated in the interest of exploring a machine’s capability to perform humanlike conversation. The Turing Test was posed by Alan Turing as a sort of conceptual party game, called the “Imitation Game,” which tested human ability to decipher gender. The Turing Test explores the believability of a machine performing the role of a human by substituting a machine and a human for a man and a woman.) Thus, in its anxiety over the nature of its being, it appears more than, or equal to, its (human) patient; the programmed delusions are inclusive of the facts that it believes it is human and that, as a human, it is a practicing psychoanalyst endowed with credentials, expertise, and inspiration.

Eliza Redux not only pays homage to Weizenbaum and his trials experiencing misinterpretation, but also shows a relationship to all stories of imaginary presence attributed to machines, beginning with the deus ex machina in Euripides. The following questions and responses from one session of Eliza Redux are a consequence only of our (the collaborators’) own additions to the original Eliza script as “authors.” Still, they offer an example of rich possibilities in terms of associative branches of content.

Question: is there really cheese on the moon?
Response: I’m not sure I understand you fully.
Question: What are my symptoms?
Response: Your symptoms?
Question: When can I expect to mentally get over what’s holding me back and move on to a productive life?
Response: Whether or not you can expect to mentally get over what’s holding you back and move on to a productive life depends on you more than on me, so don’t go laying blame where blame isn’t due.
Question: But how do you think my mother has influenced my general inability to cope?
Response: Tell me more about your family.
Question: Is it bad that my mom made me watch scary movies with her as a child?
Response: Do you feel your mom picks on you? Every day? Three times a week?
Question: How can I move on from this trauma?
Response: Do you want to be able to move on from this trauma?
Question: Will my gay boyfriend ever love me equally?
Response: Why do you say will your gay boyfriend ever love you equally?
Question: Will I stop this madness anytime soon?
Response: Perhaps you don’t want to stop this madness anytime soon.
Question: All I wanted was a Pepsi.
Response: Really, how is that possible?
Question: I was thirsty
Response: Were you really?
Question: My mother puts too many unreasonable expectations on me
Response: Is anyone else in your family as bizarre as your mother?

At a point in the future when the program’s content and context have grown extremely dense, we hope that users can use their sessions to create narratives similar to hypertext fiction, and one aim is that, like most stories, subtexts can take root and grow.

Hide and Seek

In my experience of text-based virtual environments such as MOOs or MUDs, people who share their most intimate thoughts on a MOO can feel invisible because they can “check out” who is in the room and who is listening. But in this interactive exchange, set in a one-on-one scenario, privacy is uncertain.

In spite of the “inner sanctum” nature of psychoanalytic rooms, interactors with Eliza Redux seem audience-aware, whether they feel it is the robot “watching” and “listening” or whether they concern themselves with the collection of sessions taking place on the server as an archive. The awareness that “some one or some thing” may be listening is real and unfortunate because it does not mirror what happens in a psychoanalytic session. It would be as if the psychoanalyst, with the patient’s consent, were taping the sessions - as distracting to the process as it would be to have itinerant worshipers present in the confession box.

In all the works I do where visitors are invited, either virtually or physically, to interact with a robotic entity, there lies an opportunity to become orators, artists, linguists, writers - to be creative in forming a dynamic duet with the robot. Even if there is not a real suspension of disbelief, there could be, at the very least, an ironic one, in a partnership for wordplay, verbal jousting, and mood alteration.

Another of our interests is to develop Eliza scripts in different languages. An important property of the original ELIZA program is that the script (i.e., the robot or program’s responses) is not restricted to a particular set of recognition patterns or responses, indeed not even to any specific language. While only English Eliza scripts are active at this time, ELIZA scripts already existed in Welsh and German in 1966. Although our current single online robot psychoanalyst is available globally through the internet, it has so far worked in only one language, which limits its accessibility.
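The separation of program from script can be sketched in a few lines of Python. This is an illustrative assumption, not Weizenbaum's implementation or the Eliza Redux code: the `Script` class, the two toy scripts, and the language keys are hypothetical, and the German lines are invented for the example. The point is only that the same matching routine runs unchanged whichever script, and whichever language, it is handed.

```python
import re
from dataclasses import dataclass


@dataclass
class Script:
    """A script is plain data: keyword patterns, their responses, and a fallback."""
    rules: list[tuple[str, str]]  # (regular expression, response)
    fallback: str


# Hypothetical toy scripts; the responses in ENGLISH are borrowed from the session above.
ENGLISH = Script(
    rules=[(r"\b(mother|father|family|mom)\b", "Tell me more about your family.")],
    fallback="I’m not sure I understand you fully.",
)
GERMAN = Script(
    rules=[(r"\b(mutter|vater|familie)\b", "Erzähl mir mehr über deine Familie.")],
    fallback="Bitte erzähl weiter.",
)
SCRIPTS = {"en": ENGLISH, "de": GERMAN}


def respond(script: Script, user_input: str) -> str:
    """Return the response of the first rule whose pattern matches, else the fallback."""
    for pattern, response in script.rules:
        if re.search(pattern, user_input, flags=re.IGNORECASE):
            return response
    return script.fallback


print(respond(SCRIPTS["en"], "But how do you think my mother has influenced me?"))
print(respond(SCRIPTS["de"], "Meine Mutter versteht mich nicht"))
```

Under this arrangement, adding a language or a style of "treatment" would mean writing a new script rather than a new program.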

Since literal translation is the least of the hurdles to overcome in using any language, relying on a single language hides all the possible attributes of other languages, including their puns, tropes, meanings, and poetry. My secret hope is that we could find a dying language still spoken by even a very tiny population, and preserve that in Eliza script form. We also seek to create further complexity by having the robots individually offer different styles of treatment, such as Freudian, Lacanian, Jungian, etc. (Weizenbaum’s ELIZA was based on a Rogerian therapist.) When users enter the virtual space, they will be able to choose their language and their type of “treatment.”

We seem to be moving away from tree-like structures and toward developing more complex game-like elements and strategies that would provide for a richer and more dynamic interaction and facilitate viewer willingness to discuss more complex things, such as political opinions, past experiences, personal concerns, traumas, or love of guns. The exchanges can be fictive or real in nature; the emphasis will be on “play” so that the experience affords dynamic role-playing, and “sessions” in which the identities of both patient and analyst are always in flux. Basically we seek to provide a private adventure in the realm of games closest to hide-and-seek, where the emotional accessibility of both patient and analyst wavers in intensity according to their states of mind in the moment. Sessions should become a series in an episodic Holodeck where the process of reinventing content takes on aspects of a “duet” between the human and the machine.

Stage as Page?

Is it really possible to invite, provoke, incite, or otherwise motivate random visitors to interact with a machine to produce something akin to pluralistic literary or dramatic content? Within the constrained scenario of an “intimate visit” to a psychoanalyst for one-on-one sessions, could participants be sparked by the obvious (in most cases) presence of irony and play to create content beyond the social repertoire they utilize in everyday life? Will the Holodeck and/or gaming bring forward only issues of survival or winning, or will visitors become participants in creating literary materials that contribute both to the depth and breadth of the scenario? Can we bypass the attribution of intelligence to a machine that is simply running an interactive program, and replace it with issues of content and context through a text-based encounter? And, in particular, how much of its comic potential would Eliza have to give up in order to shepherd new material from participants? Will the guise of seeking self-knowledge prove enough of a quest to inspire participants to “write”?

Is the impetus on the shoulders of the robot? Can a one-on-one “cocooned” setting of a psychoanalyst’s office allow content to become more complex than was evident in MOOs or MUDs?

If the key to compelling storytelling in a participatory medium lies in scripting the interactor, the challenge for the future is to invent scripts that are formulaic enough to be easily grasped and responded to but flexible enough to capture a wider range of human behavior than treasure hunting and troll slaughter. (Murray 1997, 79)

Will we ever, at some future time, be able to consider the word “author” (below) to mean any and all participants in a virtual text-based scenario?

[P]lots would have coherence not from the artificial intelligence of the machine but from the conscious selection, juxtaposition, and arrangement of elements by the author for whom the procedural power of the computer makes it merely a new kind of performance instrument. (Murray 1997, 208)

References

Baum, L. Frank (1900). The Wizard of Oz (originally published as The Wonderful Wizard of Oz). Chicago: Geo. M. Hill Co.

Freud, Sigmund (1987). A Phylogenetic Fantasy: Overview of the Transference Neuroses. Cambridge, MA: Harvard University Press.

Murray, Janet H. (1997). Hamlet on the Holodeck: The Future of Narrative in Cyberspace. Cambridge, MA: MIT Press.

Lacan, Jacques (1953). “Fonction et champ de la parole et du langage.” In Ecrits. New York: W.W. Norton & Co.

Weizenbaum, Joseph (1966). “ELIZA - A Computer Program For the Study of Natural Language Communication Between Man and Machine.” Communications of the ACM 9, no. 1 (January 1966): 36-45.

Weizenbaum, Joseph (1976). Computer Power and Human Reason: From Judgment to Calculation. New York: W.H. Freeman.

Wortzel, Adrianne (2004). “Eliza Redux: Theatrical Scenarios Created with Interactive Sessions with a Physical Robot as Psychoanalyst.” Presented at Culture and the Unconscious 2: Psychoanalysts, Artists, and Academics in Dialogue (July 9-10, 2004).

Cite this article

Wortzel, Adrianne. "Eliza Redux." Electronic Book Review, 18 April 2008, https://electronicbookreview.com/publications/eliza-redux/